One of the fastest-growing areas of technology is machine learning, yet even seasoned professionals regularly stumble over new terms and jargon. It’s easy to get overwhelmed by the sheer number of technical terms as research speeds up and new architectures, loss functions, and optimisation techniques appear.

This blog article is your carefully curated reference to more than fifty key and advanced machine learning terms. Some of these are widely recognised, while others are rarely defined but have a big impact. With clear explanations and relatable examples, we dissect everything from fundamental ideas like overfitting and the bias-variance tradeoff to newer ideas like LoRA, Contrastive Loss, and One Cycle Policy.

So, dive in and surprise yourself with how many of these machine learning terms you didn’t fully grasp until now.

Model Training & Optimization

Foundational machine learning terms that improve model efficiency, stability, and convergence during training.

1. Curriculum Learning

A training approach in which the model is first exposed to simpler examples and more complex ones are progressively added. This can improve convergence and generalisation by mimicking human learning.

Example: A digit classifier is trained on clean, high-contrast images before noisy, low-quality images are introduced.

It’s similar to teaching a child to read by having them start with basic three-letter words before progressing to more complicated sentences and paragraphs. This approach keeps the model from getting stuck on difficult problems in the early stages of training. By laying a strong foundation on simple concepts, the model can handle harder problems more successfully later on.

2. One Cycle Policy

A learning rate schedule that improves convergence and training efficiency by starting small, increasing to a peak, and then decreasing again.

Example: The learning rate goes from 0.001 up to 0.01 and back down to 0.001 over the course of training.

This approach is like giving your model a “warm-up, sprint, and cool-down.” The low learning rate at the start lets the model get its bearings, the high rate in the middle lets it learn quickly and skip past suboptimal regions, and the final decrease lets it fine-tune its weights and settle into a precise minimum. Models trained with this cycle often train faster and reach better final accuracy.
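The warm-up and cool-down shape can be sketched in a few lines of Python. This is a simplified illustration, not a reference implementation: it assumes linear ramps, and the function name `one_cycle_lr` and the `warmup_frac` parameter are hypothetical. Schedules used in practice often apply cosine annealing and may finish below the starting rate.

```python
def one_cycle_lr(step, total_steps, base_lr=0.001, max_lr=0.01, warmup_frac=0.3):
    """Linear ramp from base_lr up to max_lr, then linear decay back to base_lr."""
    peak_step = round(total_steps * warmup_frac)
    if step <= peak_step:
        # Warm-up phase: the learning rate rises towards the peak.
        progress = step / max(peak_step, 1)
        return base_lr + progress * (max_lr - base_lr)
    # Cool-down phase: the learning rate falls back to the base value.
    progress = (step - peak_step) / max(total_steps - peak_step, 1)
    return max_lr - progress * (max_lr - base_lr)


# Learning rate at every step of a 100-step run.
schedule = [one_cycle_lr(step, 100) for step in range(101)]
```

With the defaults, the schedule starts at 0.001, peaks at 0.01 after 30% of the steps, and returns to 0.001 at the end, matching the example values above.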

3. Lookahead Optimizer

Smooths the optimisation path by wrapping an existing optimiser and maintaining a set of slow-moving weights that are updated in the direction found by the fast optimiser.

Example: Lookahead + Adam often results in faster and steadier convergence.

Think of a “main army” (the slow weights) that follows the general direction found by a quick “scout” (the inner optimiser) that explores the terrain ahead. The scout may zigzag, but the army follows a more stable, direct route. This dual-speed approach reduces variance and makes convergence more consistent.

4. Sharpness-Aware Minimization (SAM)

An optimisation technique that encourages models to converge to flatter minima, which are thought to generalise better to data that hasn’t been seen yet.

Example: Produces more robust models that perform well on both training and test data.

Imagine trying to keep a ball balanced in a valley. A broad, level basin (a flat minimum) is far more stable than a narrow, sharp canyon (a sharp minimum). During training, SAM actively looks for these broad basins, which yields more resilient models because minor changes to the input data won’t push the model out of the valley.

5. Gradient Clipping

Stops gradients from blowing up (growing too large) by capping them at a specified value. In recurrent networks in particular, this helps keep training stable.

Example: RNN gradients are clipped to avoid divergence during training.

Think of capping the volume of a shout. The model’s response (the gradient) is kept within a reasonable range no matter how surprising an error is. This stops the model from updating its weights in a huge, unstable way, a problem known as “exploding gradients” that can completely derail the training process.
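One common way to cap gradients is to rescale the whole gradient vector when its norm exceeds a threshold. The sketch below assumes that norm-based variant (clipping each component to a fixed range is another option); the function name `clip_by_global_norm` is illustrative and the gradient is represented as a flat list of floats.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale the gradient so its L2 norm does not exceed max_norm."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        # Already within bounds: leave the gradient unchanged.
        return list(grads)
    scale = max_norm / total_norm
    # Shrink every component by the same factor, preserving the direction.
    return [g * scale for g in grads]


# A gradient of norm 5 is rescaled to norm 1; its direction is preserved.
clipped = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
```

Because every component is scaled by the same factor, the update still points the same way; only its size is limited.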

6. Bayesian Optimization

A technique for optimising functions that are expensive to evaluate (such as hyperparameter tuning objectives) by guiding the search with probabilistic models.

Example: Finding a good learning rate with fewer training runs.

When every trial run is extremely costly and slow, this is a clever search strategy for finding the best model settings. Based on the trials it has already run, it builds a probabilistic “map” of how it believes various settings will perform. It then uses this map to make an informed decision about where to search next, concentrating on promising regions and avoiding wasting time on settings it expects to perform poorly.

Read about Bayesian Thinking in detail here.

7. Batch Renormalization

A variant of batch normalisation that corrects for differences between batch statistics and global statistics, making it more stable when small batch sizes are used.

Example: Helps train models when GPU memory limits the batch size to 4.

Batch normalisation works best when it can see a large, representative set of examples at once. When you can only use a small batch, Batch Renormalisation acts as a corrective lens, adjusting the statistics of the small group so they more closely resemble those of the whole dataset. This helps stabilise training when hardware limitations force you to use small batches.

Regularization & Generalization

These are machine learning terms that help models generalise better to unseen data while avoiding overfitting and memorisation.

8. DropConnect

Instead of dropping entire neurons during training, as Dropout does, this regularisation technique randomly drops individual weights (connections) between neurons.

Example: A weight between two neurons is disabled during training, which encourages robustness.

By deactivating individual connections, DropConnect offers a more granular approach than the widely used Dropout technique, which temporarily deactivates entire neurons. In a social network, Dropout is like telling certain users to be silent, whereas DropConnect is like randomly cutting individual phone lines between users. This keeps the network from becoming overly dependent on any one connection and forces it to build more redundant pathways.

9. Label Smoothing

A technique that softens the labels during training to keep the model from becoming overconfident. It gives the wrong classes a tiny share of the probability mass.

Example: Class A is labelled 0.9 rather than 1.0, and the remaining 0.1 is spread across the other classes.

This approach teaches the model a little humility. Rather than expecting it to be 100% certain about a prediction, you ask it to be about 90% certain, acknowledging the small possibility that it might be wrong. This improves the model’s calibration and its adaptability to novel, unseen examples, and it stops the model from making wildly overconfident predictions.
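As a small illustration, smoothed targets can be built directly from a class index. The sketch assumes the convention in which the true class keeps 1 - epsilon and the remainder is split evenly across the other classes; some formulations instead add epsilon divided by the number of classes to every class. The helper name `smooth_labels` is hypothetical.

```python
def smooth_labels(true_class, num_classes, epsilon=0.1):
    """Return a soft target: 1 - epsilon on the true class, the rest shared equally."""
    off_value = epsilon / (num_classes - 1)
    return [
        1.0 - epsilon if k == true_class else off_value
        for k in range(num_classes)
    ]


# Three classes, true class 0: the target becomes roughly [0.9, 0.05, 0.05].
target = smooth_labels(true_class=0, num_classes=3, epsilon=0.1)
```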

10. Virtual Adversarial Training

Adds tiny perturbations to inputs during training to regularise predictions, increasing the robustness of the model.

Example: Adding subtle noise to images to improve classification stability.

This technique works like a sparring partner who repeatedly nudges you at your weak spots to make you stronger. It first identifies the direction in which a small change to the input would most affect the model’s prediction, and then trains the model to resist that particular change. As a result, the model is more reliable and less likely to be fooled by erratic or noisy real-world data.

11. Elastic Weight Consolidation (EWC)

A regularisation technique that penalises large changes to important weights in order to preserve knowledge of earlier tasks.

Example: The model learns new tasks without forgetting old ones.

Consider a person who has mastered the guitar and is now learning to play the piano. EWC acts as a memory aid by identifying the crucial “muscle memory” (important weights) from the guitar task. It makes those particular weights harder to change while learning the piano, preserving the old skill while still allowing new ones to be acquired.
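The “harder to change” idea is usually expressed as a quadratic penalty added to the new task’s loss. The sketch below assumes per-weight importance scores are already available (in EWC they are typically estimated from the Fisher information); the names `ewc_penalty`, `importance`, and `lam` are illustrative.

```python
def ewc_penalty(weights, old_weights, importance, lam=1.0):
    """Quadratic penalty: moving an important weight away from its old value costs more."""
    return 0.5 * lam * sum(
        imp * (w - w_old) ** 2
        for w, w_old, imp in zip(weights, old_weights, importance)
    )


old = [1.0, 1.0]
importance = [10.0, 0.1]  # the first weight matters far more to the old task

# Moving the important weight by 0.5 is penalised 100x more than moving the other.
cost_important = ewc_penalty([1.5, 1.0], old, importance)
cost_unimportant = ewc_penalty([1.0, 1.5], old, importance)
```

During training on the new task, this penalty would be added to the task loss, so the optimiser prefers to adapt weights that the old task did not rely on.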

12. Spectral Normalization

A technique that improves training stability in neural networks by constraining the spectral norm of weight matrices.

Example: Used in GAN discriminators to enforce a Lipschitz constraint and make adversarial training more stable.

Think of this as setting limits on how quickly your model can change its behaviour. Spectral normalisation keeps training from becoming chaotic or unstable by regulating the “maximum amplification” that each layer can apply.

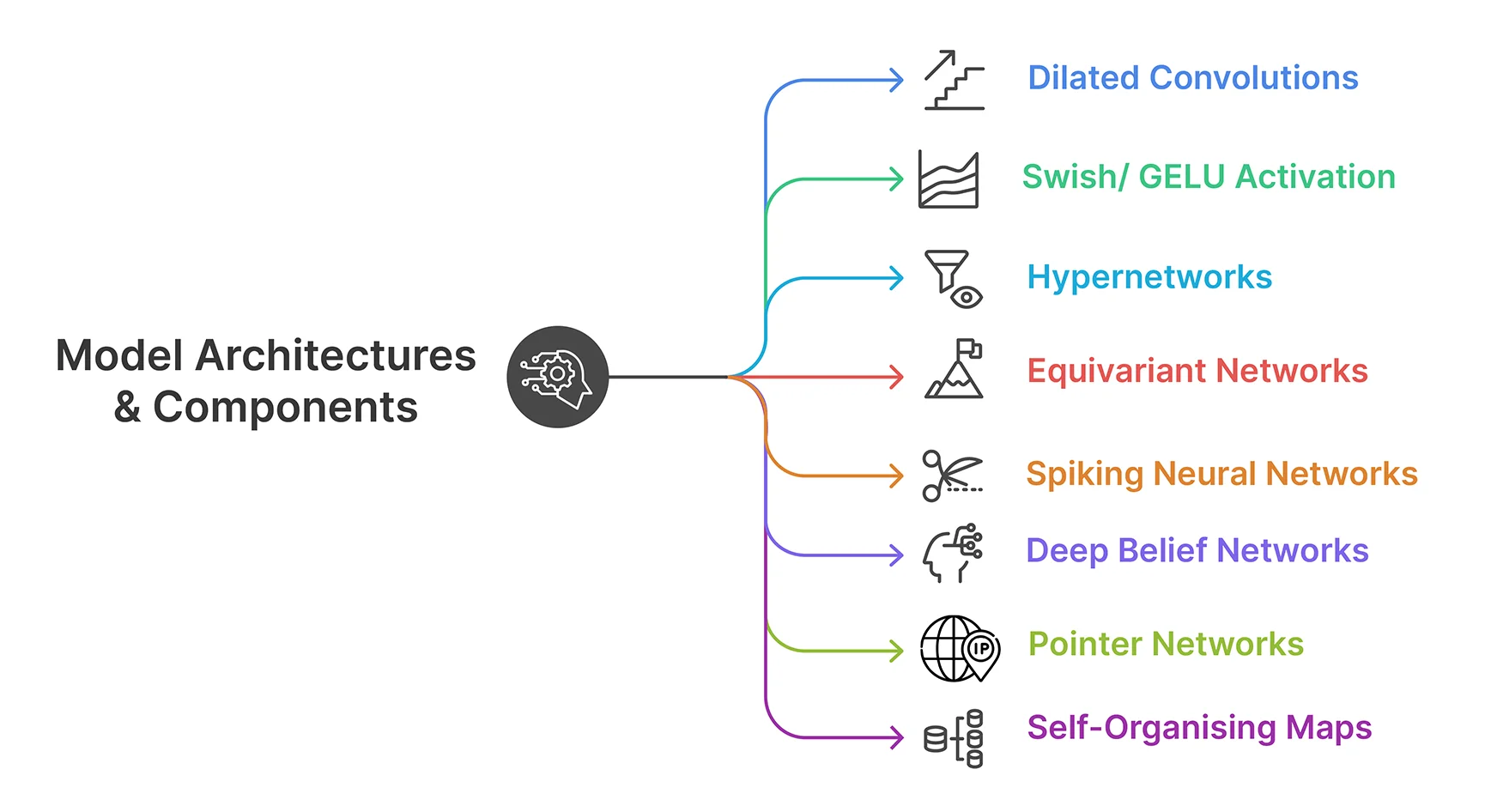

Model Architectures & Components

This section introduces advanced machine learning terms related to how neural networks are structured and how they process information.

13. Dilated Convolutions

Convolution operations that insert gaps (dilations) between kernel elements, giving networks a wider receptive field without adding more parameters.

Example: WaveNet uses them in audio generation to capture long-range dependencies.

This lets a network “see” a larger portion of an image or “hear” a longer audio clip without needing bigger eyes or ears. By spreading out its kernel, the convolution captures more context and covers more ground at the same computational cost. It’s like taking bigger steps to get a quicker sense of the big picture.
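A one-dimensional version makes the “spread-out kernel” concrete. This is a minimal sketch with no padding and stride 1; the function names are illustrative. The same three kernel weights cover three input positions with dilation 1 and five positions with dilation 2.

```python
def dilated_conv1d(signal, kernel, dilation=1):
    """Valid (unpadded) 1-D convolution whose kernel taps are spaced `dilation` apart."""
    span = (len(kernel) - 1) * dilation  # distance between first and last tap
    return [
        sum(k * signal[start + i * dilation] for i, k in enumerate(kernel))
        for start in range(len(signal) - span)
    ]


def receptive_field(kernel_size, dilation):
    """Number of input positions covered by a single output value."""
    return (kernel_size - 1) * dilation + 1


signal = [float(v) for v in range(10)]
kernel = [1.0, 1.0, 1.0]

dense = dilated_conv1d(signal, kernel, dilation=1)   # first output sums positions 0, 1, 2
sparse = dilated_conv1d(signal, kernel, dilation=2)  # first output sums positions 0, 2, 4
```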

14. Swish/GELU Activation

Advanced activation functions that are smoother than ReLU and differentiable everywhere, which helps convergence and performance in deeper models.

Example: EfficientNet uses Swish for improved accuracy, while BERT uses GELU.

If ReLU is a basic on/off light switch, Swish and GELU are dimmer switches. Unlike ReLU’s sharp corner, their smooth curves ease gradient flow during backpropagation, which stabilises the training process. This small change allows information to be processed more smoothly, which often improves final accuracy.
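The three functions are short enough to write out. The sketch uses the standard definitions: Swish as the input times its sigmoid (with the scaling parameter fixed at 1) and the exact GELU based on the Gaussian error function. Deep learning libraries sometimes use a tanh approximation of GELU instead.

```python
import math


def relu(x):
    """Hard switch: zero for negative inputs, identity otherwise."""
    return max(0.0, x)


def swish(x):
    """x * sigmoid(x): smooth, and slightly negative for small negative inputs."""
    return x / (1.0 + math.exp(-x))


def gelu(x):
    """x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


# Compare the three activations around zero, where ReLU has its sharp corner.
for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"x={x:+.1f}  relu={relu(x):.4f}  swish={swish(x):.4f}  gelu={gelu(x):.4f}")
```

Unlike ReLU, both smooth functions let a small negative signal through for slightly negative inputs, which is the “dimmer switch” behaviour described above.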

15. Hypernetworks

Neural networks that generate the weights of other neural networks, making dynamic and conditional model architectures possible.

Example: MetaNet generates layer weights for different tasks dynamically.

Picture a “master network” that acts as a factory, producing the weights for a separate “worker network” rather than solving problems itself. This lets you quickly create customised worker models suited to particular tasks or inputs. It’s an effective way to increase the adaptability and flexibility of models.

16. Equivariant Networks

Networks whose predictions respect symmetry properties (such as translation or rotation), which is especially useful in scientific fields.

Example: Rotation-equivariant CNNs are used in medical imaging and 3D object recognition.

These networks build fundamental symmetries, like those found in the laws of physics, into their architecture. For example, a rotation-equivariant network knows that a molecule remains the same molecule when it is rotated in space, so its output rotates along with the input, and predictions that should not depend on orientation do not change at all. For scientific data where these symmetries matter, this makes them highly accurate and efficient.

17. Spiking Neural Networks

A type of neural network that transmits information using discrete events (spikes) rather than continuous values, more like biological neurons.

Example: Used in energy-efficient hardware for applications such as real-time sensory processing.

Much like how our own neurons fire, SNNs communicate in short, sharp bursts rather than in a continuous hum of information.

18. Deep Belief Networks

A generative graphical model, or class of deep neural network, made up of multiple layers of latent variables (also known as “hidden units”), with connections between the layers but not between units within each layer.

Example: Used to pre-train deep neural networks.

It resembles a stack of pancakes, with each pancake representing a different level of data abstraction.

19. Pointer Networks

A type of neural network that is trained to point to a specific element of its input sequence.

Example: Applied to problems such as the travelling salesman problem, where the aim is to find the shortest route through a set of cities.

It’s like having a GPS that can point to the next turn at every intersection.

20. Self-Organising Maps

A type of unsupervised neural network that produces a discretised, low-dimensional representation of the input space of the training samples.

Example: Used to display high-dimensional data in a way that makes its underlying structure visible.

It’s like assembling a set of tiles into a mosaic, where each tile represents a different aspect of the original picture.

Data Handling & Augmentation

Learn machine learning terms focused on preparing, managing, and enriching training data to boost model performance.

21. Mixup Training

A data augmentation approach that smooths decision boundaries and reduces overfitting by interpolating two images and their labels to produce synthetic training samples.

Example: A new image is made up of 70% dog and 30% cat, with a label that reflects the same mix.

With this technique, the model learns that things aren’t always black and white. By being shown blended examples, the model learns to make less extreme predictions and to transition more smoothly between classes. This keeps the model from becoming overconfident and improves its ability to generalise to new, possibly ambiguous data.
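The interpolation itself is a weighted average applied to both the inputs and the one-hot labels. In this minimal sketch, images are stood in for by short lists of floats, the mixing weight is fixed at 0.7 to match the example, and the helper names are illustrative. In the usual recipe the weight is drawn from a Beta distribution, shown here with the standard library’s `random.betavariate`.

```python
import random


def mixup(x_a, y_a, x_b, y_b, lam):
    """Blend two samples and their one-hot labels with the same weight lam."""
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y_a, y_b)]
    return x, y


def sample_lambda(alpha=0.2, rng=random):
    """Draw a mixing weight from Beta(alpha, alpha)."""
    return rng.betavariate(alpha, alpha)


dog_pixels, dog_label = [0.9, 0.1, 0.4], [1.0, 0.0]  # toy "image" and one-hot label
cat_pixels, cat_label = [0.2, 0.8, 0.6], [0.0, 1.0]

# 70% dog, 30% cat: the label becomes roughly [0.7, 0.3].
mixed_pixels, mixed_label = mixup(dog_pixels, dog_label, cat_pixels, cat_label, lam=0.7)
```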

22. Feature Store

A centralised system for managing, serving, and reusing ML features across teams and projects.

Example: Store the “user age bucket” feature once and reuse it across multiple models.

Think of a feature store as a high-quality communal pantry for data scientists. Rather than having each cook (data scientist) make their own ingredients (features) from scratch for every meal (model), they can pull reliable, pre-processed, and documented features from the central store. This ensures consistency across an organisation, minimises errors, and saves a great deal of redundant work.

23. Batch Effect

Systematic technical differences between batches of data that can confound analysis results.

Example: Gene expression data processed on different days shows consistent differences unrelated to biology.

Think of several photographers taking pictures of the same scene with different cameras. Even though the subject is the same, technical differences in equipment produce systematic variations that need to be corrected.

Evaluation, Interpretability, & Explainability

These machine learning terms help quantify model accuracy and provide insights into how and why predictions are made.

24. Cohen’s Kappa

A statistical metric that measures agreement between two classifiers or raters while accounting for the possibility of their agreeing by chance.

Example: Even when two doctors agree 85% of the time, Kappa accounts for random agreement and may be considerably lower.

This metric assesses “true agreement” beyond what would be expected by chance alone. Two models can have high raw agreement if they both classify 90% of items as “Class A,” but Kappa corrects for the fact that they would have agreed a great deal even if they had simply guessed “Class A” every time. The question it answers is: “How much are the raters really in sync, beyond random chance?”
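The correction can be worked through by hand. The sketch below computes kappa as (observed agreement - chance agreement) / (1 - chance agreement), with chance agreement estimated from each rater’s label frequencies. The function name and the toy ratings are made up for illustration, and the sketch does not handle the degenerate case where chance agreement equals 1.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Agreement between two raters, corrected for agreement expected by chance."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Probability that both raters pick the same label if they label independently.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    return (observed - expected) / (1.0 - expected)


# Both raters say "A" 90% of the time and agree on 8 of 10 items.
rater_a = ["A"] * 9 + ["B"]
rater_b = ["A"] * 8 + ["B", "A"]
kappa = cohens_kappa(rater_a, rater_b)
```

Here raw agreement is 80%, but chance agreement is 82%, so kappa comes out slightly negative: the raters are no more in sync than two people who mostly guess “A”.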

25. Brier Score

Calculates the mean squared difference between predicted probabilities and actual outcomes to assess how accurate probabilistic predictions are.

Example: A model with better-calibrated probabilities will have a lower Brier score.

This score evaluates a forecaster’s long-term reliability. A low Brier score indicates that, on average, it rained roughly 70% of the time when a weather model predicted a 70% chance of rain. It rewards honesty and precision in probability estimates.
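For binary outcomes the calculation is a single mean. The following sketch uses made-up forecasts to show that confident, correct probabilities score near 0, while confident, wrong ones score much higher.

```python
def brier_score(probabilities, outcomes):
    """Mean squared difference between predicted probabilities and 0/1 outcomes."""
    return sum((p - o) ** 2 for p, o in zip(probabilities, outcomes)) / len(probabilities)


outcomes = [1, 1, 0, 0]  # what actually happened (1 = rain, 0 = no rain)

good_forecast = brier_score([0.9, 0.8, 0.2, 0.1], outcomes)  # close to the truth
poor_forecast = brier_score([0.1, 0.2, 0.8, 0.9], outcomes)  # confidently wrong
```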

26. Counterfactual Explanations

Explain how changing the input features would lead to a different model prediction.

Example: A user’s loan would have been approved if their income were $50,000 rather than $30,000.

This approach explains a decision by answering the “what if” question. Rather than merely stating the outcome, it offers a concrete, achievable alternative. In response to a denied loan application, it might say, “Your loan would have been approved if your down payment had been $5,000 higher.” This makes the model’s logic clear and understandable.

27. Anchors

High-precision, simple rules that, when their conditions hold, all but guarantee a prediction.

Example: “Always approve a loan if the borrower is older than 60 and earns more than $80,000.”

Anchors give a model’s prediction a “safe zone” of clear, simple rules. They pinpoint a narrow range of conditions under which the model behaves in a fixed and predictable way. However complex the model’s overall behaviour, this offers a precise, highly reliable explanation for why a particular prediction was made.

28. Integrated Gradients

An attribution technique that integrates gradients along a path from a baseline input to the actual input to determine the contribution of each input feature to a prediction.

Example: Shows which pixels had the greatest influence on an image’s classification, somewhat like what Grad-CAM does.

In essence, this technique produces a “heat map” of the relative importance of the input features. It identifies the specific pixels that the model “looked at” in order to classify an image, such as a cat’s whiskers and pointed ears. For text, it can reveal the words that had the biggest influence on the predicted sentiment.

29. Out-of-Distribution Detection

Identifying inputs that differ from the data used to train a model.

Example: The camera system of a self-driving car should be able to recognise when it is seeing an entirely different kind of object that it has never seen before.

An analogy would be a quality control inspector on an assembly line looking for items that are completely different from what they should be.

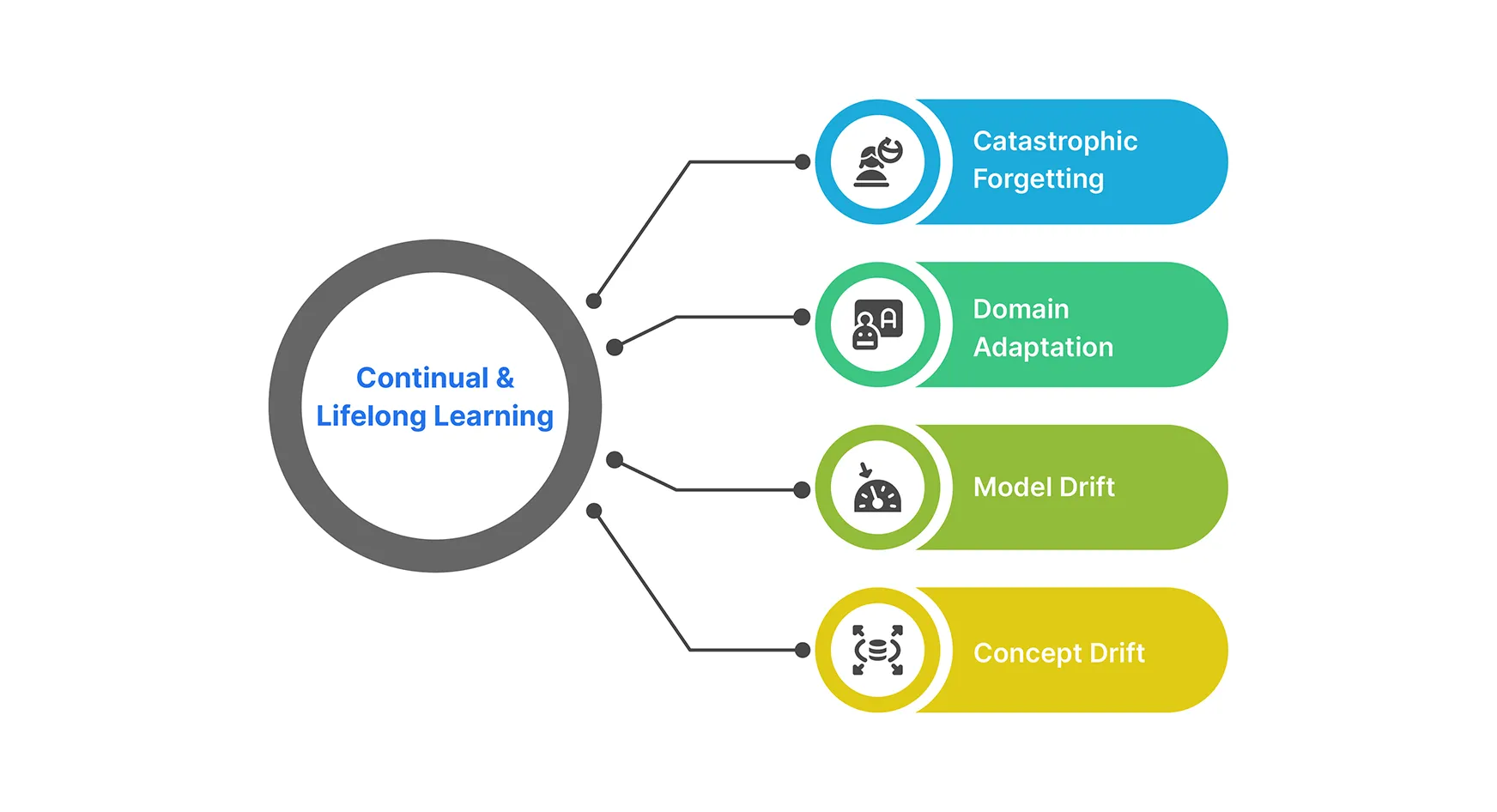

Continual & Lifelong Learning

This part explains machine learning terms related to models that adapt over time without forgetting previously learned tasks.

30. Catastrophic Forgetting

A situation in which a model that is trained on new tasks forgets what it has already learnt. It is a major obstacle to lifelong learning and is particularly common in sequential learning.

Example: After being retrained to recognise cars, a model that was trained to recognise animals completely forgets them.

This happens because the model overwrites the network weights that encoded the earlier knowledge with the new weights needed for the new task. It’s similar to how someone who has spoken only their native tongue for years may forget a language they learnt in high school. Building AI that can continually learn new things without retraining on everything it has ever seen is extremely difficult.

31. Domain Adaptation

The area of machine learning that addresses the challenge of adapting a model trained on a source data distribution to a different but related target data distribution.

Example: A spam filter trained on emails from one organisation may need to be adapted to work properly on emails from another.

An analogy would be a translator who speaks one dialect of a language well but needs to learn another.

32. Model Drift

Occurs when a model’s performance deteriorates over time because of shifting input data distributions.

Example: E-commerce recommender models were affected by changes in consumer behaviour following the COVID-19 pandemic.

Here, a once-accurate model loses its relevance because the environment it was trained in has changed. It’s like trying to navigate a city in 2025 using a map from 2019: the map was accurate when it was drawn, but new roads have been built and old ones have been closed. To stay up to date, production models need to be continually monitored for drift and retrained on fresh data.

33. Concept Drift

Occurs when the statistical properties of the target variable that the model is trying to predict change over time in unforeseen ways.

Example: As customer behaviour evolves over time, a model that predicts customer churn may lose accuracy.

An analogy would be trying to navigate a city using an outdated map. The map may no longer be as helpful because the streets and landmarks have changed.

Loss Functions & Distance Metrics

These machine learning terms define how model predictions are evaluated and compared to actual outcomes.

34. Contrastive Learning

Encourages representations of similar data to be closer together in latent space while pushing dissimilar data apart.

Example: SimCLR compares pairs of augmented images to learn representations. CLIP uses similar logic.

This works like an AI “spot the difference” game. The model is shown an image (the “anchor”), a slightly modified version of it (the “positive”), and an entirely different image (the “negative”). Its goal is to pull the anchor and positive closer together while pushing the negative far away, effectively learning what makes an image distinctive.

35. Triplet Loss

A loss function used to train models to embed similar inputs closer together and dissimilar inputs farther apart in a learnt space.

Example: A face recognition model is trained to minimise the distance between two images of the same person and maximise the distance between two images of different people.

It is like arranging your bookshelf so that books by the same author sit next to each other and books by different authors go on different shelves.
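A minimal sketch of the loss for a single triplet follows. It assumes plain Euclidean distances and a margin of 1.0; some formulations use squared distances instead. The embeddings are toy two-dimensional points rather than outputs of a real face model.

```python
import math


def euclidean(u, v):
    """Straight-line distance between two embedding vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Zero once the negative is at least `margin` farther from the anchor than the positive."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


anchor = [0.0, 0.0]
same_person = [0.0, 1.0]  # distance 1 from the anchor

# Negative far away (distance 5): the constraint is satisfied, so the loss is 0.
easy = triplet_loss(anchor, same_person, [3.0, 4.0])
# Negative too close (distance 1.5): the loss is positive and pushes it away.
hard = triplet_loss(anchor, same_person, [0.0, 1.5])
```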

36. Wasserstein Distance

A metric that calculates the “cost” of transforming one probability distribution into another, providing more meaningful distances than KL divergence, particularly when the distributions barely overlap.

Example: Wasserstein GANs use it to give training gradients better stability.

Think of this as the least amount of work required to move one pile of sand so that it matches the shape of another. Unlike many other distance measures, the Wasserstein distance takes into account the “transport cost” of moving probability mass around.
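In one dimension the “sand moving” cost has a simple closed form for two sample sets of equal size: sort both and average the distances between matched points. The sketch below covers only that special case, and the function name is illustrative.

```python
def wasserstein_1d(samples_a, samples_b):
    """1-Wasserstein distance between two equal-size 1-D samples."""
    if len(samples_a) != len(samples_b):
        raise ValueError("this sketch only handles samples of equal size")
    sorted_a, sorted_b = sorted(samples_a), sorted(samples_b)
    # In 1-D the cheapest transport plan matches the i-th smallest points.
    return sum(abs(a - b) for a, b in zip(sorted_a, sorted_b)) / len(sorted_a)


# Two piles with the same shape, five units apart: every grain moves 5 units.
shifted = wasserstein_1d([0.0, 1.0, 2.0], [7.0, 5.0, 6.0])
```

The two samples do not overlap at all, yet the distance still reports how far apart they are, which is the property that makes it useful as a training signal.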

Advanced Concepts & Theory

These are high-level machine learning terms that underpin cutting-edge research and theoretical breakthroughs.

37. Lottery Ticket Hypothesis

Proposes that inside a larger, overparameterised neural network there is a smaller, well-initialised subnetwork (a “winning ticket”) that can be trained in isolation to achieve comparable performance.

Example: High accuracy can be achieved by training only a small pruned subnetwork of ResNet-50.

Picture a huge, randomly initialised network as a huge lottery pool. According to the hypothesis, a small, perfectly structured subnetwork, the “winning ticket,” has been hidden inside from the start. Finding this special subnetwork would let you save a great deal of computation by training just that subnetwork and getting the same excellent results as training the entire network. The biggest obstacle, though, is coming up with a practical way to find the “winning ticket.”

38. Meta Learning

This process, also known as “learning to learn,” involves teaching a model to adapt rapidly to new tasks with little data.

Example: MAML makes it possible to adapt quickly to novel image recognition tasks.

The model learns the general process of learning rather than a single task. It’s like teaching a student how to learn extremely quickly so that they can master a new subject (task) with minimal study materials (data). To achieve this, the model is trained on a broad range of learning tasks.

39. Neural Tangent Kernel

A theoretical framework that offers insights into generalisation by analysing the learning dynamics of infinitely wide neural networks.

Example: It helps analyse the training behaviour of deep networks without actually training them.

NTK is a powerful mathematical tool that links deep learning to more traditional, well-understood kernel methods. It lets researchers make precise theoretical claims about the learning process of very wide neural networks and the reasons they generalise to new data. It offers a way to understand the dynamics of deep learning without requiring costly training experiments.

40. Manifold Learning

A class of unsupervised learning algorithms whose goal is to find a low-dimensional representation of high-dimensional data while preserving the data’s geometric structure.

Example: Visualising a high-dimensional dataset in two or three dimensions to get a better understanding of its structure.

It’s like making a flat map of the Earth. You’re representing a three-dimensional object in two dimensions, but you’re trying to preserve the relative distances and shapes of the continents.

41. Disentangled Representation

A form of representation learning in which the learnt features correspond to distinct, interpretable factors of variation in the data.

Example: A model that learns to represent faces might have separate features for facial expression, eye colour, and hair colour.

Like a set of sliders, it lets you adjust different aspects of an image independently, such as its saturation, contrast, and brightness.

42. Gumbel-Softmax Trick

A differentiable approximation of sampling from a categorical distribution, which makes gradient-based optimisation with discrete choices possible.

Example: Variational autoencoders with categorical latent variables can be trained end-to-end. It is like rolling a weighted die but getting a “soft” result that you can still backpropagate through.

Rather than making hard discrete choices that block gradients, this technique produces a smooth approximation that looks nearly discrete but is still differentiable, allowing backpropagation through sampling operations.
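The sampling step can be sketched without any deep learning framework: add Gumbel noise to the logits, divide by a temperature, and apply a softmax. Lower temperatures give outputs closer to one-hot. The function name and parameters are illustrative, and this version only produces the forward sample. In practice an autodiff library would supply the gradients.

```python
import math
import random


def gumbel_softmax_sample(logits, temperature=0.5, rng=random):
    """Draw one relaxed (soft) one-hot sample from a categorical distribution."""
    noisy = []
    for logit in logits:
        # Clamp the uniform draw away from 0 and 1 so both logarithms are finite.
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        gumbel_noise = -math.log(-math.log(u))
        noisy.append((logit + gumbel_noise) / temperature)
    # Numerically stable softmax over the perturbed, temperature-scaled logits.
    top = max(noisy)
    exps = [math.exp(v - top) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


# A seeded generator keeps the illustration reproducible.
sample = gumbel_softmax_sample([2.0, 1.0, 0.1], temperature=0.5, rng=random.Random(0))
```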

43. Denoising Score Matching

A technique for learning probability distributions by training a model to estimate the gradient of the log-density (the score function).

Example: Diffusion models use score matching to learn how to reverse the noising process and generate new samples.

This is like learning to “push” each pixel in the right direction to make a noisy image cleaner. Rather than modelling probabilities directly, you learn the gradient field that points towards regions of higher probability.

Deployment & Production

This section focuses on machine learning terms that ensure models run efficiently, reliably, and safely in real-world environments.

44. Shadow Deployment

An approach to silent testing in which a new model is deployed alongside the existing one without affecting end users.

Example: Risk-free testing of model quality in production.

It is like having a trainee pilot fly in a simulator that receives real-time flight data but whose actions don’t actually control the aircraft. Because the system can record the new model’s predictions and compare them with the decisions made by the live model, you can test its performance on real-world data without endangering users.

45. Serving Latency

Serving latency is how long it takes a deployed model to produce a prediction. In real-time systems, low latency is essential.

Example: A voice assistant needs a model response in less than 50 ms.

This is the time that passes between sending a query to the model and getting a response. Speed is just as important as accuracy in many real-world applications, like language translation, online ad bidding, and fraud detection. Low latency is a key requirement for deployment, since a prediction that arrives too late is often worthless.

Probabilistic & Generative Methods

Explore machine learning terms that deal with uncertainty modelling and the generation of new, data-like samples through probabilistic methods.

46. Variational Inference

An approximate technique that carries out Bayesian inference using optimisation over distributions instead of sampling.

Example: A probabilistic latent space is learnt in VAEs.

For probability problems that are too hard to compute exactly, this is a useful mathematical shortcut. Rather than trying to determine the exact, intricate form of the true probability distribution, it finds the best approximation within a simpler, easier-to-handle family of distributions (such as a bell curve). This turns an intractable computation into a manageable optimisation problem.

47. Monte Carlo Dropout

A technique for estimating prediction uncertainty that applies dropout at inference time and averages predictions over multiple forward passes.

Example: Making multiple predictions of tumour probability to obtain uncertainty estimates.

By keeping dropout, which is normally only active during training, switched on at prediction time, this technique turns a standard network into a probabilistic one. Passing the same input through the model 30 or 50 times yields a set of slightly different outputs. The spread of those outputs gives you a useful estimate of the model’s prediction uncertainty.

48. Knowledge Distillation

A compression technique that uses softened outputs to teach a smaller “student” model to imitate a larger “teacher” model.

Example: Rather than using hard labels, the student learns from soft class probabilities.

Think of an apprentice (the small student model) being taught by a master craftsman (the large teacher model). As well as showing the final correct answer, the master gives a detailed “why” (e.g., “this looks 80% like a dog, but it has some cat-like features”). The extra information in the soft probabilities greatly helps the smaller student model learn the same intricate reasoning.
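The “softened outputs” come from dividing the logits by a temperature before the softmax. The sketch below shows that step and a simple cross-entropy between the softened teacher and student distributions. The function names, temperature, and logits are illustrative. Practical recipes usually combine this term with the ordinary hard-label loss.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Softmax over logits / temperature; higher temperatures flatten the distribution."""
    scaled = [z / temperature for z in logits]
    top = max(scaled)
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Cross-entropy between the softened teacher and student distributions."""
    teacher = softmax_with_temperature(teacher_logits, temperature)
    student = softmax_with_temperature(student_logits, temperature)
    return -sum(t * math.log(s) for t, s in zip(teacher, student))


teacher_logits = [4.0, 1.0, 0.0]  # e.g. dog, cat, car

hard = softmax_with_temperature(teacher_logits, temperature=1.0)  # nearly one-hot
soft = softmax_with_temperature(teacher_logits, temperature=4.0)  # reveals "cat-like" mass

matched = distillation_loss([4.0, 1.0, 0.0], teacher_logits)
mismatched = distillation_loss([0.0, 1.0, 4.0], teacher_logits)
```

The loss is lowest when the student reproduces the teacher’s softened distribution, so minimising it transfers the teacher’s relative preferences between classes, not just its top answer.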

You can read all about Distilled Models here.

49. Normalizing Flows

Use a series of invertible functions to transform a simple probability distribution into a complex one for generative modelling.

Example: Glow generates high-quality images using normalising flows.

Think of these as a set of mathematical prisms that stretch, bend, and twist a simple shape, such as a uniform blob of clay, into a highly intricate sculpture, such as the distribution of faces in the real world, by applying a series of reversible transformations. Because every step is fully reversible, they can be used to compute the exact likelihood of existing data as well as to generate new data.

50. Causal Inference

A branch of machine learning and statistics that focuses on working out the causal relationships between variables.

Example: Working out whether a new marketing campaign genuinely increased sales or whether the increase was merely a coincidence.

It is the difference between knowing that roosters crow when the sun rises and understanding that the rooster’s crow does not cause the sun to rise.

51. Dynamic Time Warping

An algorithm that measures similarity between temporal sequences that may differ in timing or speed by finding the best alignment between them.

Example: Comparing two speech signals spoken at different speeds, or aligning financial time series with different seasonal patterns.

It is like matching the notes of two songs sung at different tempos. Because DTW compresses and stretches the time axis to find the optimal alignment, you can compare sequences even when their timing differs considerably.
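The alignment is found with dynamic programming. The sketch below is the textbook quadratic-time version for one-dimensional sequences, using absolute difference as the local cost and no window constraint; the function name is illustrative.

```python
def dtw_distance(seq_a, seq_b):
    """Cost of the cheapest alignment between two numeric sequences."""
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    # cost[i][j] = best alignment cost of the first i items of seq_a and first j of seq_b.
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(seq_a[i - 1] - seq_b[j - 1])
            # Extend the cheapest of: advance in seq_a only, seq_b only, or both.
            cost[i][j] = local + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


# The same melody at half speed aligns perfectly, so the distance is 0.
same_tune = dtw_distance([1, 2, 3], [1, 1, 2, 2, 3, 3])
# A transposed melody cannot be fixed by warping time alone.
different_tune = dtw_distance([1, 2, 3], [2, 3, 4])
```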

Conclusion

Mastering these 50+ machine learning terms takes more than just reading definitions; it also requires developing an understanding of how modern ML systems are designed, trained, optimised, and deployed.

These concepts highlight the intricacy and beauty of the systems we work with every day, from how models learn (One Cycle Policy, Curriculum Learning) and how they generalise (Label Smoothing, Data Augmentation) to how they misbehave (Data Leakage, Mode Collapse).

Whether you’re reading a research paper, building your next model, or troubleshooting unexpected results, let this glossary of machine learning terms serve as a mental road map to help you navigate this constantly changing field.
