If you have just started to learn machine learning, chances are you have already heard about a Decision Tree. While you may not currently be aware of how it works, you have almost certainly used it in some form or other. Decision Trees have long powered the backend of some of the most popular services available globally. While there are better alternatives available now, decision trees still hold their importance in the world of machine learning.

To give you some context, a decision tree is a supervised machine learning algorithm used for both classification and regression tasks. Decision tree analysis maps out different choices and their possible outcomes, which helps make decisions based on certain criteria, as we'll discuss later in this blog.

In this article, we'll go through what decision trees are in machine learning, how the decision tree algorithm works, their advantages and disadvantages, and their applications.

What Is a Decision Tree?

A decision tree is a non-parametric machine learning algorithm, which means that it makes no assumptions about the relationship between input features and the target variable. Decision trees can be used for classification and regression problems. A decision tree resembles a flowchart with a hierarchical tree structure consisting of:

- Root node

- Branches

- Internal nodes

- Leaf nodes
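To see these parts on a real model, the sketch below fits a shallow scikit-learn tree on the bundled Iris dataset and prints it with `export_text`. The dataset is an illustrative choice and is not part of the original discussion.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

# Small dataset bundled with scikit-learn (no download needed).
iris = load_iris()

# A shallow tree keeps the printed structure readable.
clf = DecisionTreeClassifier(max_depth=2, random_state=0)
clf.fit(iris.data, iris.target)

# In the printed text, the first condition belongs to the root node, each
# "<=" / ">" line is a branch, nested conditions are internal nodes, and
# lines ending in "class: ..." are leaf nodes.
tree_text = export_text(clf, feature_names=list(iris.feature_names))
print(tree_text)
print("depth:", clf.get_depth(), "leaves:", clf.get_n_leaves())
```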

Types of Decision Trees

There are two different kinds of decision trees: classification trees and regression trees. Together, they are often referred to by the umbrella term CART (Classification and Regression Trees). We will talk about both briefly in this section.

- Classification Trees: A classification tree predicts categorical outcomes. It learns to sort the data into categories and then predicts which category a new sample belongs to. For example, a classification tree might output whether an email is “Spam” or “Not Spam” based on features of the sender, subject and content.

- Regression Trees: A regression tree is used when the target variable is continuous. It predicts a numerical value rather than a categorical one. Each leaf predicts the average of the target values of the training samples that fall into it. For example, a regression tree could predict the price of a house from features such as size, area, number of bedrooms, and location.
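To make the difference concrete, here is a small scikit-learn sketch on hypothetical toy data. It uses a single numeric feature and is not taken from a real spam or housing dataset. The classifier's leaves return labels, while the regressor's leaves return the mean target of the training samples in that leaf.

```python
from sklearn.tree import DecisionTreeClassifier, DecisionTreeRegressor

# Hypothetical toy data: one numeric feature.
X = [[1.0], [2.0], [3.0], [10.0], [11.0], [12.0]]

# Classification: the target is a category, so each leaf predicts a label.
y_labels = ["Not Spam", "Not Spam", "Not Spam", "Spam", "Spam", "Spam"]
clf = DecisionTreeClassifier(max_depth=1, random_state=0).fit(X, y_labels)

# Regression: the target is continuous, so each leaf predicts the mean
# of the training targets that reach it.
y_values = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
reg = DecisionTreeRegressor(max_depth=1, random_state=0).fit(X, y_values)

class_preds = clf.predict([[2.5], [11.5]])
value_preds = reg.predict([[2.5], [11.5]])
print(class_preds)  # class labels
print(value_preds)  # leaf means: (1+2+3)/3 and (10+11+12)/3
```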

This algorithm typically uses ‘Gini impurity’ or ‘Entropy’ to identify the best attribute for a node split. Gini impurity measures how often a randomly chosen sample from a node would be misclassified if it were labelled at random according to the node's class distribution. The lower the value, the better the split is for that attribute. Entropy is a measure of disorder or randomness in the dataset. So the lower the entropy after splitting on an attribute, the more desirable that attribute is for the split, and the more predictable the resulting child nodes. Both measures apply to classification trees. Regression trees typically choose splits that reduce the variance (squared error) of the target instead.

In practice, with scikit-learn, we choose the type by using either DecisionTreeClassifier for classification or DecisionTreeRegressor for regression:

```python
from sklearn.tree import DecisionTreeClassifier, DecisionTreeRegressor

# Example classifier (e.g., predict whether emails are spam or not)
clf = DecisionTreeClassifier(max_depth=3, random_state=42)

# Example regressor (e.g., predict house prices)
reg = DecisionTreeRegressor(max_depth=3)
```

Information Gain and Gini Index in Decision Tree

So far, we have discussed the basic intuition behind how a decision tree works. Now let's discuss the attribute selection measures of a decision tree, which help select the best attribute to split on at each node. There are two popular approaches, which we'll discuss below:

1. Information Gain

Information Gain measures how effective a particular attribute is at reducing the entropy in the dataset. It helps select the most informative features for splitting the data, leading to a more accurate and efficient model.

Suppose S is a set of instances and A is an attribute. Values(A) is the set of all possible values of A, v is an individual value from that set, and S_v is the subset of S for which A takes the value v. Information Gain is then calculated as:

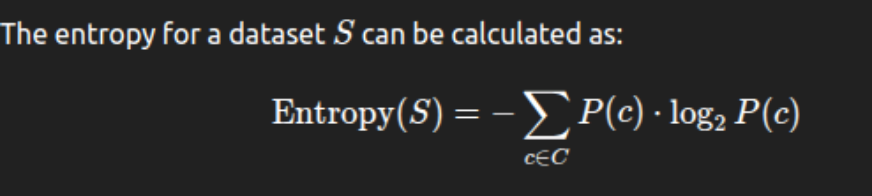

Entropy: In the context of decision trees, entropy measures the disorder or randomness in the dataset. It is highest when the classes are evenly distributed and decreases as the distribution becomes more homogeneous. So, a node with low entropy is one whose instances mostly belong to the same class, i.e., the node is pure or nearly pure.

Entropy(S) = -Σ P(c) log2 P(c), summed over all classes c in C

Where P(c) is the proportion of instances in S that belong to class c, and C is the set of all classes.
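The entropy formula translates directly into a few lines of Python. This is a minimal sketch, and the function name `entropy` is our own rather than a library function.

```python
import math
from collections import Counter


def entropy(labels):
    """Base-2 entropy of a list of class labels: -sum of P(c) * log2 P(c)."""
    n = len(labels)
    return sum(-(k / n) * math.log2(k / n) for k in Counter(labels).values())


print(entropy(["Yes", "Yes", "Yes", "Yes"]))      # 0.0 (pure node)
print(entropy(["Yes", "No"]))                     # 1.0 (evenly split, two classes)
print(entropy(["Yes", "Yes", "No", "No", "No"]))  # about 0.971 (mixed)
```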

Example: Suppose we want to decide whether or not to play tennis based on two weather conditions, Outlook and Temperature.

Outlook has 3 values: Sunny, Overcast, Rain

Temperature has 3 values: Hot, Mild, Cool

The Play Tennis outcome has 2 values: Yes or No. The counts for each Outlook value are:

| Outlook | Play Tennis | Count |
|---|---|---|
| Sunny | No | 3 |
| Sunny | Yes | 2 |
| Overcast | Yes | 4 |
| Rain | No | 1 |
| Rain | Yes | 4 |

Calculating Information Gain

Now we'll calculate the Information Gain when the split is based on Outlook.

Step 1: Entropy of the Entire Dataset S

The total number of instances in S is 14. Summing the counts in the table gives 10 Yes (2 Sunny + 4 Overcast + 4 Rain) and 4 No (3 Sunny + 1 Rain).

The entropy of S is:

Entropy(S) = -(10/14 log2(10/14) + 4/14 log2(4/14)) ≈ 0.863

Step 2: Entropy of each subset based on Outlook

Now, let's split the data points into subsets based on Outlook:

Sunny (5 records: 2 Yes and 3 No):

Entropy(Sunny) = -(⅖ log2(⅖) + ⅗ log2(⅗)) ≈ 0.971

Overcast (4 records: 4 Yes, 0 No):

Entropy(Overcast) = 0 (the subset is pure, since all outcomes are the same)

Rain (5 records: 4 Yes, 1 No):

Entropy(Rain) = -(⅘ log2(⅘) + ⅕ log2(⅕)) ≈ 0.722

Step 3: Calculate Information Gain

Now we'll calculate the information gain for Outlook:

Gain(S, Outlook) = Entropy(S) - (5/14 * Entropy(Sunny) + 4/14 * Entropy(Overcast) + 5/14 * Entropy(Rain))

Gain(S, Outlook) ≈ 0.863 - (5/14 * 0.971 + 4/14 * 0 + 5/14 * 0.722) ≈ 0.863 - 0.605 ≈ 0.26

So the Information Gain for the Outlook attribute is about 0.26.

This indicates that Outlook is somewhat effective at predicting the outcome. However, it still leaves some uncertainty, because the Sunny and Rain subsets remain mixed.
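The same calculation can be checked in Python directly from the Outlook table. Summing the table's counts gives 10 Yes and 4 No overall. This is a minimal sketch; the helper names are our own.

```python
import math

# Counts from the Outlook table: value -> (Yes, No)
outlook_counts = {"Sunny": (2, 3), "Overcast": (4, 0), "Rain": (4, 1)}


def entropy_from_counts(counts):
    """Base-2 entropy from per-class counts (zero counts contribute nothing)."""
    total = sum(counts)
    return sum(-(c / total) * math.log2(c / total) for c in counts if c > 0)


def information_gain(split_counts):
    """Entropy of the parent minus the size-weighted entropy of the subsets."""
    parent = [sum(col) for col in zip(*split_counts.values())]  # class totals
    n = sum(parent)
    weighted = sum(sum(c) / n * entropy_from_counts(c) for c in split_counts.values())
    return entropy_from_counts(parent) - weighted


print(f"Entropy(S) = {entropy_from_counts([10, 4]):.3f}")
print(f"Gain(S, Outlook) = {information_gain(outlook_counts):.3f}")
```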

2. Gini Index

Just like Information Gain, the Gini Index is used to decide the best feature for splitting the data, but it operates differently. The Gini Index measures how often a randomly chosen element would be incorrectly classified if it were labelled at random according to the class distribution in the subset. In other words, it measures impurity: how mixed the classes are in a subset of data. So, the higher the Gini Index after splitting on an attribute, the less likely that attribute is to be chosen for the split. Therefore, an attribute with a lower Gini Index is preferred in such decision trees.

Gini(S) = 1 - Σ P(i)^2, summed over i = 1 to m

Where:

m is the number of classes in the dataset, and

P(i) is the probability of class i in the dataset S.

For example, if we have a binary classification problem with classes “Yes” and “No”, then the probability of each class is the fraction of instances in that class. For binary classification, the Gini Index ranges from 0 for a perfectly pure node to 0.5 for maximum impurity.

Therefore, Gini = 0 means that all instances in the subset belong to the same class, and Gini = 0.5 means the instances are split equally between the two classes.
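The Gini formula and its two boundary cases can be checked with a short sketch. The function name `gini` is our own, not a library API.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((k / n) ** 2 for k in Counter(labels).values())


print(gini(["Yes", "Yes", "Yes", "Yes"]))  # 0.0: pure node
print(gini(["Yes", "No", "Yes", "No"]))    # 0.5: maximum for two classes
```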

Example: We'll reuse the same example of deciding whether to play tennis based on the weather conditions Outlook and Temperature.

Outlook has 3 values: Sunny, Overcast, Rain

Temperature has 3 values: Hot, Mild, Cool

The Play Tennis outcome has 2 values: Yes or No.

| Outlook | Play Tennis | Count |
|---|---|---|
| Sunny | No | 3 |
| Sunny | Yes | 2 |
| Overcast | Yes | 4 |
| Rain | No | 1 |
| Rain | Yes | 4 |

Calculating Gini Index

Now we'll calculate the Gini Index when the split is based on Outlook.

Step 1: Gini Index of the Entire Dataset S

The total number of instances in S is 14. Summing the counts in the table gives 10 Yes and 4 No.

The Gini Index of S is:

P(Yes) = 10/14, P(No) = 4/14

Gini(S) = 1 - ((10/14)^2 + (4/14)^2)

Gini(S) = 1 - (0.510 + 0.082) = 1 - 0.592 = 0.408

Step 2: Gini Index for each subset based on Outlook

Now, let's split the data points into subsets based on Outlook:

Sunny (5 records: 2 Yes and 3 No):

P(Yes) = ⅖, P(No) = ⅗

Gini(Sunny) = 1 - ((⅖)^2 + (⅗)^2) = 0.48

Overcast (4 records: 4 Yes, 0 No):

Since all instances in this subset are “Yes”, the Gini Index is:

Gini(Overcast) = 1 - ((4/4)^2 + (0/4)^2) = 1 - 1 = 0

Rain (5 records: 4 Yes, 1 No):

P(Yes) = ⅘, P(No) = ⅕

Gini(Rain) = 1 - ((⅘)^2 + (⅕)^2) = 0.32

Step 3: Weighted Gini Index for the Split

Now, we calculate the weighted Gini Index for the split based on Outlook. This is the impurity of the whole dataset after the split.

Weighted Gini(S, Outlook) = 5/14 * Gini(Sunny) + 4/14 * Gini(Overcast) + 5/14 * Gini(Rain)

Weighted Gini(S, Outlook) = 5/14 * 0.48 + 4/14 * 0 + 5/14 * 0.32 ≈ 0.286

Step 4: Gini Gain

Gini Gain is the reduction in the Gini Index after the split:

Gini Gain(S, Outlook) = Gini(S) - Weighted Gini(S, Outlook)

Gini Gain(S, Outlook) = 0.408 - 0.286 = 0.122

So, the Gini Gain for the Outlook attribute is 0.122. This means that using Outlook as the splitting attribute reduces the impurity of the dataset by 0.122, which indicates how effective this feature is at classifying the data.
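Here is a minimal sketch that recomputes the weighted Gini Index and the Gini Gain from the Outlook table's counts (10 Yes and 4 No in total). The variable and helper names are our own.

```python
# Counts from the Outlook table: value -> (Yes, No)
outlook_counts = {"Sunny": (2, 3), "Overcast": (4, 0), "Rain": (4, 1)}


def gini_from_counts(counts):
    """Gini impurity computed from per-class counts."""
    total = sum(counts)
    return 1.0 - sum((c / total) ** 2 for c in counts)


# Parent class counts are the column sums over all subsets: (10, 4).
parent = tuple(sum(col) for col in zip(*outlook_counts.values()))
n = sum(parent)

gini_parent = gini_from_counts(parent)
weighted_gini = sum(sum(c) / n * gini_from_counts(c) for c in outlook_counts.values())
gini_gain = gini_parent - weighted_gini

print(f"Gini(S) = {gini_parent:.3f}")
print(f"Weighted Gini(S, Outlook) = {weighted_gini:.3f}")
print(f"Gini Gain(S, Outlook) = {gini_gain:.3f}")
```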

How Does a Decision Tree Work?

As discussed, a decision tree is a supervised machine learning algorithm that can be used for both regression and classification tasks. A decision tree starts with the selection of a root node using one of the splitting criteria, information gain or the Gini index. Building a decision tree then involves recursively splitting the training data until the outcomes within each branch are as homogeneous as possible. The decision tree algorithm proceeds top-down from the root. Here is how it works:

- Start with the root node, which holds all training samples.

- Choose the best attribute to split the data. The best feature for the split is the one that produces the purest child nodes, meaning nodes where the data points belong to the same class. This can be measured with either information gain or the Gini index.

- Split the data into smaller subsets according to the chosen feature (maximum information gain or minimum Gini index). Repeat the process on each child node until the final subsets are homogeneous, i.e., all from the same class.

- The final step stops the tree from growing further once a condition is met, known as the stopping criteria. This happens if or when:

- All the data in the node belongs to the same class, i.e., it is a pure node.

- No further splits are possible.

- The maximum depth of the tree is reached.

- The number of samples in the node falls below a set minimum.

When any of these conditions holds, the node becomes a leaf and is labelled with the predicted class (or value) for that region of the data.

Recursive Partitioning

This top-down process is called recursive partitioning. It is also a greedy algorithm, because at each step it picks the best split based only on the current data. This approach is efficient but does not guarantee a globally optimal tree.
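The greedy, top-down procedure can be sketched from scratch in plain Python. This is a simplified illustration, not how scikit-learn implements it. It makes multiway splits on categorical features (in the style of ID3) and scores them with Gini impurity, whereas scikit-learn's CART makes binary threshold splits. All function names are hypothetical. Leaves store the majority class, and the stopping rules mirror the ones listed above.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels, features):
    """Greedy step: return the feature with the lowest weighted Gini, or None."""
    best_feature, best_score = None, gini(labels)
    for f in features:
        groups = {}
        for row, label in zip(rows, labels):
            groups.setdefault(row[f], []).append(label)
        if len(groups) < 2:
            continue  # every row has the same value, so this split separates nothing
        score = sum(len(g) / len(labels) * gini(g) for g in groups.values())
        if score < best_score:  # only accept splits that reduce impurity
            best_feature, best_score = f, score
    return best_feature


def build_tree(rows, labels, features, depth=0, max_depth=3):
    """Recursively partition the data; leaves hold the majority class."""
    majority = Counter(labels).most_common(1)[0][0]
    # Stopping criteria: pure node, no features left, or maximum depth reached.
    if len(set(labels)) == 1 or not features or depth >= max_depth:
        return majority
    feature = best_split(rows, labels, features)
    if feature is None:  # no split reduces impurity
        return majority
    remaining = [f for f in features if f != feature]
    node = {"feature": feature, "majority": majority, "children": {}}
    for value in sorted({row[feature] for row in rows}):
        idx = [i for i, row in enumerate(rows) if row[feature] == value]
        node["children"][value] = build_tree(
            [rows[i] for i in idx], [labels[i] for i in idx],
            remaining, depth + 1, max_depth,
        )
    return node


def predict(tree, row):
    """Follow branches until a leaf (a plain label) is reached."""
    while isinstance(tree, dict):
        # Unseen category values fall back to the node's majority class.
        tree = tree["children"].get(row[tree["feature"]], tree["majority"])
    return tree


# Usage on the Outlook / Play Tennis counts from the tables above.
counts = {("Sunny", "No"): 3, ("Sunny", "Yes"): 2, ("Overcast", "Yes"): 4,
          ("Rain", "No"): 1, ("Rain", "Yes"): 4}
rows, labels = [], []
for (outlook, play), k in counts.items():
    rows += [{"Outlook": outlook}] * k
    labels += [play] * k

tree = build_tree(rows, labels, ["Outlook"])
print(tree)
print(predict(tree, {"Outlook": "Sunny"}), predict(tree, {"Outlook": "Overcast"}))
```

The Sunny and Rain leaves stay impure because no features are left to split on, so they fall back to their majority class.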

For example, think of a decision tree for a coffee decision. The root node asks, “Time of Day?”. If it's morning, it asks “Tired?”. If yes, it leads to “Drink Coffee”, else to “No Coffee.” A similar branch exists for the afternoon. This illustrates how a tree makes sequential decisions until reaching a final answer.

In this example, the tree starts with “Time of day?” at the root. Depending on the answer, the next node is “Are you tired?”. Finally, the leaf gives the final class or decision: “Drink Coffee” or “No Coffee”.

As the tree grows, each split aims to create purer child nodes. If splits stop early (due to a depth limit or small sample size), a leaf may be impure, containing a mix of classes. Its prediction is then the majority class in that leaf (or, for a regression tree, the mean of its target values).

If the tree grows very large, we have to add a depth limit or pruning (removing branches that contribute little) to prevent overfitting and to control the tree's size.
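Both remedies are available in scikit-learn. `max_depth` limits growth up front, and `ccp_alpha` applies minimal cost-complexity pruning after the tree is grown. The sketch uses the bundled breast cancer dataset purely for illustration. The mid-range alpha is an arbitrary choice rather than a tuned value; in practice it would be selected with cross-validation.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Fully grown tree: keeps splitting until leaves are pure.
full = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)

# Pre-pruning: cap the depth of the tree.
shallow = DecisionTreeClassifier(max_depth=3, random_state=0).fit(X_train, y_train)

# Post-pruning: cost_complexity_pruning_path returns the effective alphas;
# a larger ccp_alpha removes more branches from the fully grown tree.
path = full.cost_complexity_pruning_path(X_train, y_train)
alpha = path.ccp_alphas[len(path.ccp_alphas) // 2]  # arbitrary, for illustration only
pruned = DecisionTreeClassifier(random_state=0, ccp_alpha=alpha).fit(X_train, y_train)

for name, model in [("full", full), ("max_depth=3", shallow), ("ccp_alpha", pruned)]:
    print(f"{name}: {model.get_n_leaves()} leaves, "
          f"test accuracy {model.score(X_test, y_test):.3f}")
```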

Advantages and disadvantages of decision trees

Decision trees have many strengths that make them a popular choice in machine learning, although they also have pitfalls. In this section, we'll talk about some of the biggest advantages and disadvantages of decision trees:

Advantages

- Easy to understand and interpret: Decision trees are very intuitive and can be visualized as flowcharts. Once a tree is built, one can easily see which feature leads to which prediction. This makes the model more transparent.

- Handle both numerical and categorical data: Decision trees can split on both categorical and numerical features, so in principle they can work with mixed data types without extensive preprocessing. Note, however, that some implementations, including scikit-learn's, require categorical features to be encoded as numbers first.

- Capture non-linear relationships in the data: Decision trees can model complex patterns in the data, so they can capture non-linear relationships between input features and target variables.

- Fast and scalable: Decision trees take relatively little time to train and can handle large datasets with reasonable efficiency.

- Minimal data preparation: Decision trees do not require feature scaling. Each split compares a single feature against a threshold (or checks its category), so rescaling a feature does not change which samples go to which side.
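Here is a sketch of the preprocessing point with scikit-learn. The Outlook strings must first be turned into numbers, here with `OrdinalEncoder`, but no scaling step is needed. The rows are rebuilt from the counts in the Outlook table, and the pipeline itself is an illustrative choice.

```python
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import OrdinalEncoder
from sklearn.tree import DecisionTreeClassifier

# Outlook / Play Tennis rows rebuilt from the counts in the Outlook table.
counts = {("Sunny", "No"): 3, ("Sunny", "Yes"): 2, ("Overcast", "Yes"): 4,
          ("Rain", "No"): 1, ("Rain", "Yes"): 4}
X = [[outlook] for (outlook, _), k in counts.items() for _ in range(k)]
y = [play for (_, play), k in counts.items() for _ in range(k)]

# OrdinalEncoder maps each category to an integer; no scaler is needed.
model = make_pipeline(OrdinalEncoder(), DecisionTreeClassifier(random_state=0))
model.fit(X, y)

predictions = model.predict([["Sunny"], ["Overcast"], ["Rain"]])
print(predictions)
```

Ordinal encoding imposes an arbitrary order on the categories, but the tree can still isolate each category with successive threshold splits.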

Disadvantages

- Overfitting: As a decision tree grows deeper, it easily overfits the training data. The final model then fails to generalize and performs poorly on test or unseen real-world data.

- Instability: A decision tree depends heavily on the attributes it chooses for splitting the data. Small changes in the training set can lead to a different split being chosen at one node, which changes everything below it and produces a very different tree. As a result, the tree is unstable.

- Complexity increases as the depth of the tree increases: Deep trees with many levels also require more memory and time to evaluate, in addition to the overfitting issue discussed above.
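A quick way to see overfitting is to compare training and test accuracy for an unrestricted tree and a depth-limited one. The data here is synthetic (`make_classification` with about 10% of labels flipped as noise), so the exact scores are illustrative only. Typically the unrestricted tree reaches perfect training accuracy while its test accuracy lags behind.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data; flip_y=0.1 randomly reassigns about 10% of the labels.
X, y = make_classification(n_samples=500, n_features=10, flip_y=0.1, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

results = {}
for depth in (None, 3):
    tree = DecisionTreeClassifier(max_depth=depth, random_state=0).fit(X_train, y_train)
    results[depth] = (tree.score(X_train, y_train), tree.score(X_test, y_test), tree.get_depth())
    train_acc, test_acc, actual_depth = results[depth]
    print(f"max_depth={depth}: depth={actual_depth}, "
          f"train={train_acc:.2f}, test={test_acc:.2f}")
```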

Applications of Decision Trees

Decision Trees are popular in practice across the machine learning and data science fields due to their interpretability and flexibility. Here are some real-world examples:

- Recommendation Systems: A decision tree can provide recommendations to a user on an e-commerce or media website by analyzing that user's activity and content preferences. Based on the patterns and splits it has learned, the tree suggests particular products or content that the user is likely to be interested in. For example, an online store can use a decision tree to classify which product category a user is interested in based on their online activity.

- Fraud Detection: Decision trees are often used in financial fraud detection to flag suspicious transactions. In this case, the tree can split on features like transaction amount, transaction location, transaction frequency, customer characteristics and more to classify whether the activity is fraudulent.

- Marketing and Customer Segmentation: Marketing teams can use decision trees to segment customers. For example, a decision tree could classify whether a customer is likely to respond to a campaign or is more likely to churn, based on historical patterns in the data.

These examples demonstrate the broad range of uses for decision trees. They can be applied to both classification and regression tasks in fields ranging from recommendation algorithms to marketing to engineering.
