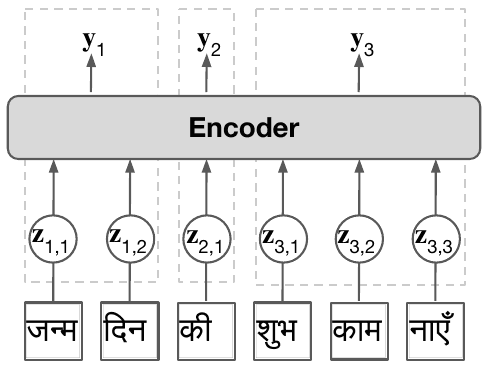

Subword tokenization requires balancing computational efficiency and vocabulary coverage, which often leads to suboptimal performance on languages and scripts not prioritized during training. We propose to augment pretrained language models with a vocabulary-free encoder that generates input embeddings from text rendered as pixels. Through experiments on English-centric language models, we demonstrate that our approach substantially improves machine translation performance and facilitates effective cross-lingual transfer, outperforming tokenizer-based methods. Furthermore, we find that pixel-based representations outperform byte-level approaches and standard vocabulary expansion. Our approach enhances the multilingual capabilities of monolingual language models without extensive retraining and reduces decoding latency via input compression.

- † University of Copenhagen

- ‡ Mohamed bin Zayed University of Artificial Intelligence

- ** Work done while at Apple