Apple researchers are advancing AI and ML through fundamental research, and to support the broader research community and help accelerate progress in this field, we share much of this research through publications and engagement at conferences. Next week, the International Conference on Machine Learning (ICML) will be held in Vancouver, Canada, and Apple is proud to once again participate in this important event for the research community and to be an industry sponsor.

At the main conference and associated workshops, Apple researchers will present new research across a number of topics in AI and ML, including advances in computer vision, language models, diffusion models, reinforcement learning, and more. A selection of the notable Apple ML research papers accepted at ICML is highlighted below.

ICML attendees will be able to experience demonstrations of Apple’s ML research in our booth #307 during exhibition hours. Apple is also sponsoring and participating in a number of affinity group-hosted events that support underrepresented groups in the ML community. A comprehensive overview of Apple’s participation in and contributions to ICML 2025 can be found here, and a selection of highlighted papers that will be presented follows below.

Improving Simulation-Based Inference

Many fields in science and engineering have come to rely on highly complex computer simulations to model real-world phenomena that were previously modeled with simpler equations. These simulations improve model versatility and the ability to replicate and explain complex phenomena, but they also require new statistical inference methods. Simulation-based inference (SBI) has emerged as the workhorse for inferring the parameters of these stochastic simulators. SBI algorithms use neural networks to learn surrogate models of the likelihood, likelihood ratio, or posterior distribution, which can be used to extract confidence intervals over the parameters of interest given an observation. However, SBI has been shown to be unreliable when the simulator is misspecified.

In an oral presentation at ICML, Apple researchers will share work that addresses this challenge: Addressing Misspecification in Simulation-based Inference through Data-driven Calibration. The paper describes robust posterior estimation (RoPE), a framework that overcomes model misspecification with a small real-world calibration set of ground-truth parameter measurements. The work formalizes the misspecification gap as the solution of an optimal transport (OT) problem between learned representations of real-world and simulated observations, allowing RoPE to learn a model of the misspecification without placing additional assumptions on its nature.
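To give a feel for the optimal transport ingredient mentioned above, here is a minimal sketch of entropic OT (Sinkhorn iterations) between two small sets of one-dimensional embeddings. It is not the RoPE algorithm: the function name `sinkhorn_plan`, the squared-distance cost, the uniform marginals, the regularization strength `eps`, and the toy embedding values are all assumptions made for illustration.

```python
import math

def sinkhorn_plan(real, sim, eps=0.5, n_iters=500):
    """Entropic OT plan between two sets of 1-D embeddings (uniform marginals)."""
    n, m = len(real), len(sim)
    r, c = [1.0 / n] * n, [1.0 / m] * m
    # Gibbs kernel built from squared-distance costs between embeddings.
    K = [[math.exp(-((a - b) ** 2) / eps) for b in sim] for a in real]
    u, v = [1.0] * n, [1.0] * m
    for _ in range(n_iters):
        # Alternately rescale rows and columns to match the marginals.
        u = [r[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [c[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    # plan[i][j] is the mass coupling real embedding i with simulated embedding j.
    return [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]

# Hypothetical embeddings of real-world and simulated observations.
real_embeddings = [0.0, 1.0, 2.0]
sim_embeddings = [0.2, 1.1, 2.3]
plan = sinkhorn_plan(real_embeddings, sim_embeddings)
```

In this toy setting, the resulting plan softly pairs each real-world embedding with the simulated embeddings closest to it, which is the kind of coupling an OT formulation provides.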

Exploring Normalizing Flows for Image Generation

Normalizing Flows (NFs) are likelihood-based models for continuous inputs. This technique has previously shown promising results for density estimation and generative modeling, but NFs have not received much attention in the research community in recent years, as diffusion models and autoregressive approaches have become the dominant paradigms for image generation.

In an oral presentation at ICML, Apple researchers will share Normalizing Flows are Capable Generative Models, which shows that NFs are more powerful than previously believed and can be used for high-quality image generation. The paper describes TarFlow: a simple and scalable architecture that enables highly performant NF models. TarFlow is a Transformer-based variant of Masked Autoregressive Flows (MAFs): it consists of a stack of autoregressive Transformer blocks on image patches, alternating the autoregression direction between layers. Leveraging multiple techniques to improve sample quality, TarFlow sets new state-of-the-art results on likelihood estimation for images, beating the previous best methods by a large margin, and it can generate samples with quality and diversity comparable to diffusion models, a first for a standalone NF model.
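The general structure of an autoregressive affine flow with alternating direction can be sketched in a few lines. The example below is a toy under stated assumptions, not TarFlow itself: the hand-written `conditioner` stands in for a causal Transformer block, the inputs are scalars rather than image patches, and all function names are hypothetical.

```python
import math

def conditioner(prefix, layer):
    """Toy stand-in for a causal Transformer block: maps x_<i to (mu, alpha)."""
    s = sum(prefix)
    return 0.5 * math.tanh(s + layer), 0.3 * math.tanh(0.5 * s - layer)

def layer_forward(x, layer):
    """One autoregressive affine layer: z_i = (x_i - mu_i) * exp(-alpha_i)."""
    z, logdet = [], 0.0
    for i in range(len(x)):
        mu, alpha = conditioner(x[:i], layer)
        z.append((x[i] - mu) * math.exp(-alpha))
        logdet -= alpha  # log |dz_i / dx_i|
    return z, logdet

def layer_inverse(z, layer):
    """Invert one layer sequentially, since x_i depends on x_<i."""
    x = []
    for i in range(len(z)):
        mu, alpha = conditioner(x, layer)  # x holds exactly x_<i here
        x.append(z[i] * math.exp(alpha) + mu)
    return x

def forward(x, num_layers=4):
    total_logdet = 0.0
    for layer in range(num_layers):
        x, logdet = layer_forward(x, layer)
        total_logdet += logdet
        x = x[::-1]  # flip the sequence so the next layer runs the other way
    return x, total_logdet

def inverse(z, num_layers=4):
    for layer in reversed(range(num_layers)):
        z = layer_inverse(z[::-1], layer)  # undo the flip, then the layer
    return z

def log_likelihood(x, num_layers=4):
    """Exact log-density under a standard normal prior on z."""
    z, logdet = forward(x, num_layers)
    log_prior = sum(-0.5 * v * v - 0.5 * math.log(2 * math.pi) for v in z)
    return log_prior + logdet
```

Because every layer is invertible with a tractable Jacobian, the same stack gives an exact likelihood (via `forward`) and a sampler (via `inverse` applied to Gaussian noise).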

Advancing a Theoretical Understanding of Diffusion Model Composition

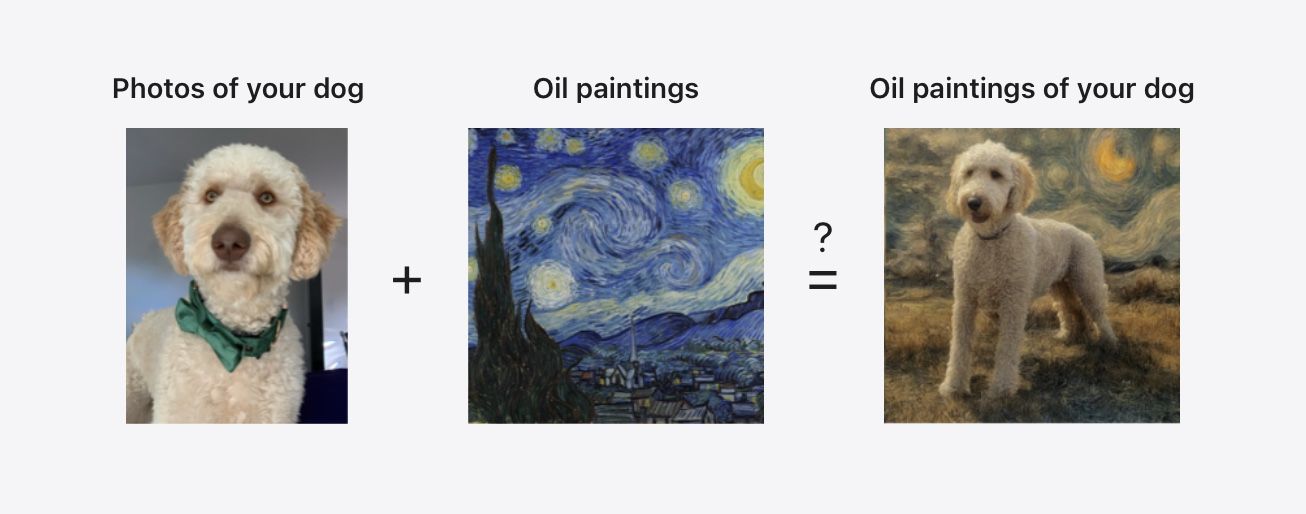

Being able to build novel compositions at inference time using only the outputs of pretrained models (either entirely separate models, or different conditionings of a single model) could enable generations that are potentially more complex than any model could produce individually. For example, imagine one diffusion model is trained on photos of your dog, and another diffusion model is trained on a collection of oil paintings; if the outputs of these models could be combined, they could be used to generate oil paintings of your dog, even though these generations would be out-of-distribution (OOD) for both models. Prior empirical work has shown that this ambitious vision is at least partially achievable in practice, but how and why such compositions work has not been theoretically explained.

At ICML, Apple researchers will present Mechanisms of Projective Composition of Diffusion Models, a new paper that studies the theoretical foundations of composition in diffusion models, with a particular focus on out-of-distribution extrapolation and length-generalization. The work shares a precise definition of a desired result of composition, projective composition, and provides a theoretical explanation for when and why composing distributions via linear score combination delivers the desired outcome.
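A toy example may help make linear score combination concrete. The sketch below assumes unit-variance Gaussians whose scores are known in closed form, and combines them as the base score plus the sum of each concept's offset from the base, which is one common form of linear combination rather than necessarily the exact operator studied in the paper. The function names and the means are illustrative assumptions.

```python
def gaussian_score(x, mean):
    """Score (gradient of the log-density) of an isotropic unit-variance Gaussian."""
    return [-(xi - mi) for xi, mi in zip(x, mean)]

def composed_score(x, base_mean, concept_means):
    """Linear combination: s_base(x) + sum_i (s_i(x) - s_base(x))."""
    s_base = gaussian_score(x, base_mean)
    total = list(s_base)
    for mean in concept_means:
        s = gaussian_score(x, mean)
        total = [t + (si - bi) for t, si, bi in zip(total, s, s_base)]
    return total

def find_mode(score_fn, x0, step=0.1, n_steps=300):
    """Follow the score uphill to locate the mode of the composed density."""
    x = list(x0)
    for _ in range(n_steps):
        x = [xi + step * gi for xi, gi in zip(x, score_fn(x))]
    return x

# Each "concept" shifts a different coordinate away from the base distribution.
base = [0.0, 0.0]
concepts = [[2.0, 0.0], [0.0, 3.0]]
mode = find_mode(lambda x: composed_score(x, base, concepts), [5.0, -5.0])
```

Here the composed score is that of a Gaussian centered at (2, 3), a point that carries both shifts even though neither individual distribution is centered there. Real diffusion models offer no such closed form, which is why a theory of when this works is needed.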

Scaling Laws for LLM Fine-Tuning

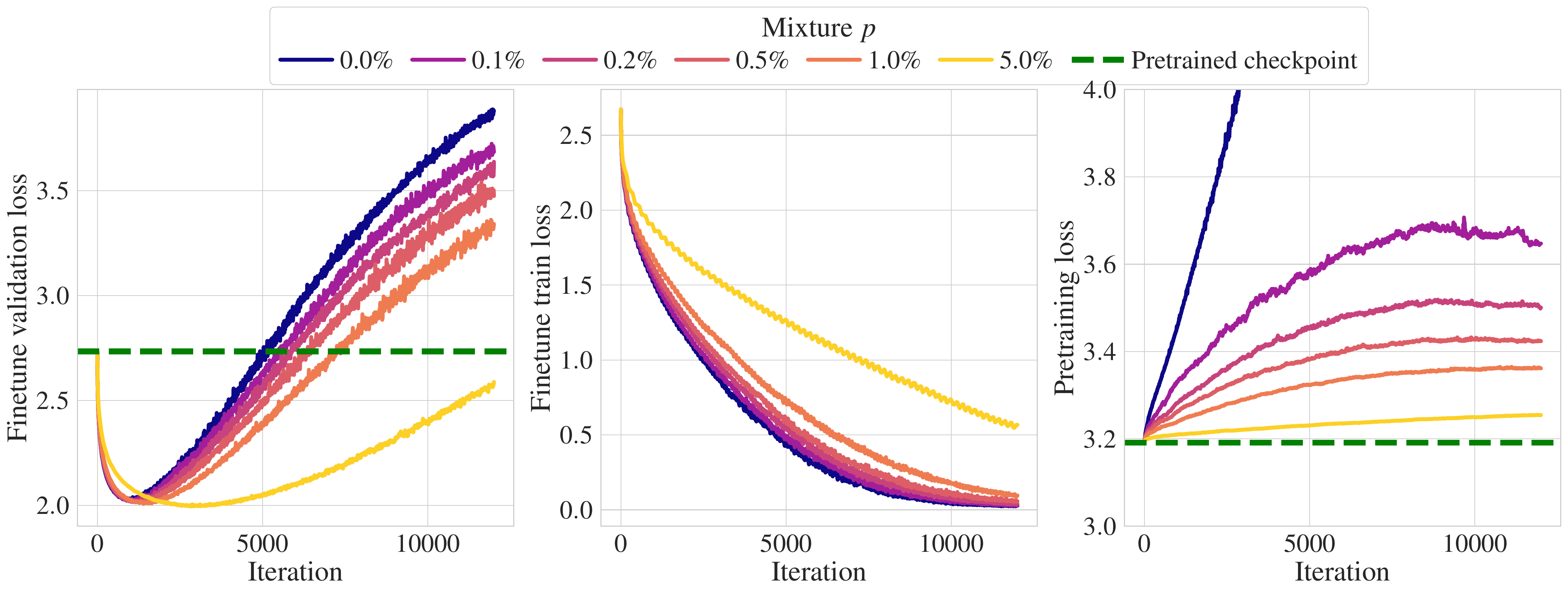

A common approach to get LLMs to perform well in a target domain is to fine-tune them by training them to do unsupervised next-token prediction on data from that domain. However, fine-tuning presents two challenges: i) if the amount of target data is limited (as is the case in most practical applications), the model will quickly overfit, and ii) the model will drift away from the original model and forget the pre-training distribution.

At ICML, Apple researchers will present Scaling Laws for Forgetting during Finetuning with Pretraining Data Injection, which quantifies these two phenomena for several target domains, amounts of available target data, and model scales. The work also measures the efficiency of mixing pre-training and target data for fine-tuning to avoid forgetting and mitigate overfitting. A key practical finding in the paper is that including as little as 1% of pre-training data in the fine-tuning data mixture shields a model from forgetting the pre-training set.
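In practice, injecting pre-training data can be as simple as sampling each fine-tuning example from the pre-training set with a small probability. The sketch below shows one way to do this. The function names, the per-example sampling scheme, and the placeholder datasets are assumptions for illustration and do not come from the paper.

```python
import itertools
import random

def mixed_stream(target_data, pretrain_data, pretrain_fraction=0.01, seed=0):
    """Yield (source, example) pairs; each draw comes from pre-training
    data with probability `pretrain_fraction`, otherwise from target data."""
    rng = random.Random(seed)
    while True:
        if rng.random() < pretrain_fraction:
            yield "pretrain", rng.choice(pretrain_data)
        else:
            yield "target", rng.choice(target_data)

def pretrain_share(n_examples, **kwargs):
    """Empirical share of pre-training examples in the first n draws."""
    stream = mixed_stream(["target doc"], ["pretrain doc"], **kwargs)
    draws = itertools.islice(stream, n_examples)
    return sum(source == "pretrain" for source, _ in draws) / n_examples

share = pretrain_share(100_000, pretrain_fraction=0.01)
```

Sampling per example keeps the mixture ratio independent of the batch size. A deterministic interleaving schedule would be an equally reasonable design.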

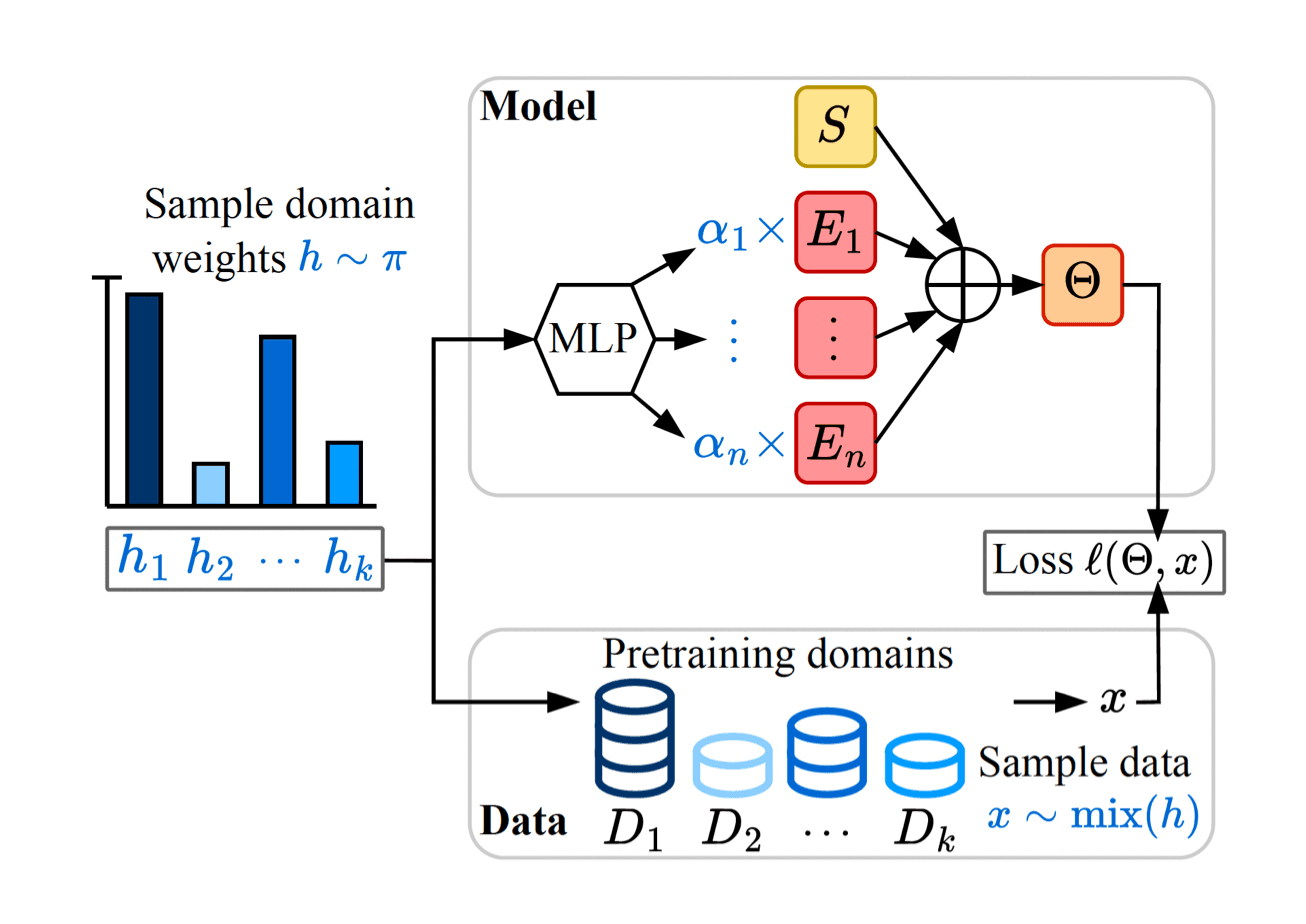

A Novel Architecture for Pre-training Specialist Language Models

Because they’re smaller than LLMs, specialist language models are usually more efficient and cheaper, but in many cases, the specialization data (like a company’s internal database, or data on a niche topic) may be too scarce to train a good-quality small model on its own. As a result, specialist models are commonly trained with large pre-training datasets, by adjusting the mixture weights of the pre-training distribution to resemble the scarce specialist dataset. However, this requires pre-training a full model for each specialization dataset, which can become impractical as the number of specialized downstream tasks, the model size, and the amount of data grow.

At ICML, Apple researchers will present Soup-of-Experts: Pretraining Specialist Models via Parameters Averaging, which addresses this challenge. The paper details a novel architecture that can instantiate a model at test time for any domain weights with minimal computational cost and without re-training the model. A pre-trained Soup-of-Experts model can instantly instantiate a model tailored to any mixture of domain weights, making this approach particularly well suited for quickly producing many different specialist models under size constraints.
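The idea of instantiating a model by averaging parameters can be illustrated with a toy sketch. In the example below, a bank of expert parameter vectors is combined with coefficients computed from the domain weights. The linear-plus-softmax `routing` function, the function names, and the tiny parameter vectors are hypothetical stand-ins, not the architecture from the paper.

```python
import math

def softmax(values):
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def coefficients(domain_weights, routing):
    """Map domain weights to one mixing coefficient per expert
    (hypothetical routing: a linear map followed by a softmax)."""
    logits = [sum(r * h for r, h in zip(row, domain_weights)) for row in routing]
    return softmax(logits)

def instantiate(domain_weights, experts, routing):
    """Build one model's parameters as a weighted average of the expert bank."""
    alpha = coefficients(domain_weights, routing)
    n_params = len(experts[0])
    return [sum(a * e[j] for a, e in zip(alpha, experts)) for j in range(n_params)]

# Two experts with two parameters each, and three pre-training domains.
experts = [[0.0, 0.0], [2.0, 4.0]]
routing = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]  # zero logits give a uniform average
params = instantiate([0.2, 0.3, 0.5], experts, routing)
```

The instantiated model has the size of a single expert, and producing it costs only one weighted sum over the bank, which is what makes generating many specialists cheap in this kind of scheme.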

Learning Autonomy from Self-Play Reinforcement Learning

Self-play has been an effective strategy for training reinforcement learning (RL) policies for many games, robotic manipulation, and even bio-engineering. At ICML, Apple researchers will share Robust Autonomy Emerges from Self-Play, a research paper showing that this technique is also a surprisingly effective strategy for learning policies for autonomous driving.

Using GIGAFLOW, a batched simulator architected for self-play RL on a massive scale, the researchers were able to use an unprecedented amount of simulation data: 1.6 billion kilometers of driving. The resulting learned policy achieves state-of-the-art performance on three independent autonomous driving benchmarks, and outperforms the prior state-of-the-art when tested on recorded real-world scenarios, amidst human drivers, despite never having seen human data during training. The policy is also realistic when assessed against human references and achieves unprecedented robustness, averaging 17.5 years of continuous driving between incidents in simulation.

Demonstrating ML Research in the Apple Booth

During exhibition hours, ICML attendees will be able to interact with live demos of Apple ML research in booth #307, including MLX, a flexible array framework that is optimized for Apple silicon. MLX enables training and inference of arbitrarily complex models on Apple silicon powered devices with great brevity and flexibility. At the Apple booth, researchers will showcase fine-tuning a 7B parameter LLM on an iPhone, image generation using a large diffusion model on an iPad, and text generation using a number of LLMs on an M2 Ultra Mac Studio.

Supporting the ML Research Community

Apple is committed to supporting underrepresented groups in the ML community. We’re proud to again sponsor several affinity groups hosting events onsite at ICML, including LatinX in AI (workshop on July 14) and Women in Machine Learning (WiML) (workshop on July 16). In addition to supporting these workshops with sponsorship, Apple employees will also be participating at each of these and other affinity events.

Learn More about Apple ML Research at ICML 2025

ICML brings together the community of researchers advancing the state-of-the-art in ML, and Apple is proud to again share innovative new research at the event and connect with the community attending it. This post highlights just a selection of the works Apple ML researchers will present at ICML 2025, and a comprehensive overview and schedule of our participation can be found here.