The push to add AI to customer service, which we have been witnessing lately in virtually every sector, can sometimes come at a high cost for security. On December 22, 2025, the team of ethical hackers at Pen Test Partners (PTP) went public with a series of flaws they found in the new AI chatbot for Eurostar.

For your information, Eurostar is the well-known high-speed rail operator that connects the UK to mainland Europe via the Channel Tunnel, carrying millions of travellers between major hubs like London, Paris, and Amsterdam.

How the Flaws Were Found

What started as a researcher planning a simple train journey from London turned into the discovery of “weak guardrails” that left the system open to manipulation. For your information, guardrails are the digital “safety brakes” that stop an AI from going off-topic or leaking secrets.

According to PTP researchers, Eurostar’s bot had a major design flaw: it only checked the last message in a chat for safety. By simply editing earlier messages in the conversation on their own screen, the researchers found they could trick the AI into ignoring its own rules.
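To illustrate the kind of check that would close this gap, here is a minimal sketch in Python. It is not Eurostar's or PTP's code: the secret key, the message fields (`role`, `text`, `tag`), and the function names are all hypothetical. The idea is that the server signs each message it has already approved and re-verifies the whole history on every request, so an earlier message edited on the client no longer matches its signature.

```python
import hashlib
import hmac
import json

# Hypothetical server-side secret; a real service would load this from a secret store.
SECRET_KEY = b"example-secret-not-for-production"


def sign_message(conversation_id: str, index: int, role: str, text: str) -> str:
    """Return an HMAC tag binding a message to its conversation and position."""
    payload = json.dumps([conversation_id, index, role, text]).encode("utf-8")
    return hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()


def verify_history(conversation_id: str, history: list[dict]) -> bool:
    """Check every message in the history, not only the latest one."""
    for index, message in enumerate(history):
        expected = sign_message(
            conversation_id, index, message["role"], message["text"]
        )
        supplied = str(message.get("tag", ""))
        # Constant-time comparison; a missing or altered tag fails the check.
        if not hmac.compare_digest(expected.encode("utf-8"), supplied.encode("utf-8")):
            return False
    return True
```

Under this assumed design, a request whose history fails `verify_history` would be rejected before any of it reaches the model.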

The technical side of the “hack” was surprisingly simple. Once the safety checks were bypassed, the researchers used prompt injection to make the bot reveal its internal instructions and the type of AI model it was using.

Further probing revealed two other significant issues. First, the chatbot was vulnerable to HTML injection and could be forced to display malicious code or fake links directly in the user’s chat window. Second, conversation and message IDs weren’t verified.

This means the system didn’t properly check whether a chat session actually belonged to the user, potentially allowing an attacker to “replay” or inject malicious content into someone else’s conversation.
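Both of these issues map onto long-standing web security practices. The Python sketch below is illustrative only and assumes a hypothetical in-memory store and hypothetical function names: one function escapes the bot's reply so it is shown as text rather than interpreted as HTML, and another confirms that a conversation ID belongs to the session making the request.

```python
import hmac
from html import escape

# Hypothetical in-memory store mapping conversation IDs to the owning session ID.
CONVERSATION_OWNERS: dict[str, str] = {}


def register_conversation(conversation_id: str, session_id: str) -> None:
    """Record which session created a conversation."""
    CONVERSATION_OWNERS[conversation_id] = session_id


def owns_conversation(conversation_id: str, session_id: str) -> bool:
    """Return True only if the conversation exists and belongs to this session."""
    owner = CONVERSATION_OWNERS.get(conversation_id)
    if owner is None:
        return False
    return hmac.compare_digest(owner.encode("utf-8"), session_id.encode("utf-8"))


def render_reply(text: str) -> str:
    """Escape model output so tags and quotes are displayed, not executed."""
    return escape(text, quote=True)
```

In a setup like this, a message aimed at a conversation the caller does not own would be refused, and a reply containing `<script>` would appear in the chat window as literal text.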

Fixing the Flaws

This research, which was shared with Hackread.com, shows that finding the vulnerabilities was actually easier than getting them fixed. The team first alerted Eurostar on June 11, 2025, but there was no response. Finally, after almost a month of chasing, they tracked down Eurostar’s Head of Security on LinkedIn on July 7.

Researchers later learned that Eurostar had apparently outsourced its security reporting process right when the bugs were reported, leading the company to claim it had “no record” of the warnings.

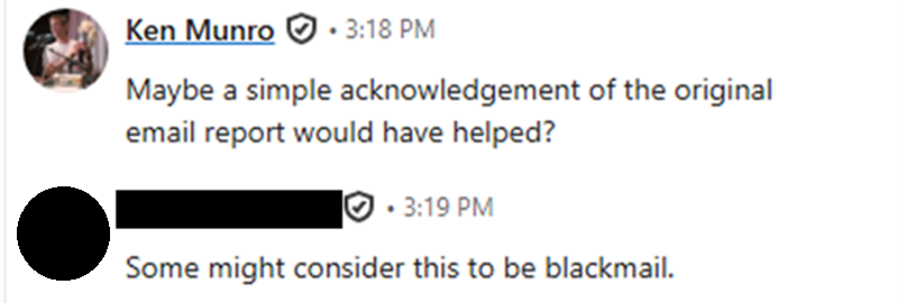

At one point, the rail operator even accused PTP’s security team of “blackmail” just for trying to flag the issues. The accusation came despite the company having a publicly accessible vulnerability disclosure program.

“We had disclosed a vulnerability in good faith,” the researchers noted, expressing their surprise at the hostile response.

While the problems have now been patched, the team warned that this should be a wake-up call for big brands. Just because a tool is AI-powered doesn’t mean the old rules of web security don’t apply, and if the backend isn’t solid, the fancy AI features are little more than “theatre.”