
# Introduction

Building your own local AI hub gives you the freedom to automate tasks, process private data, and create custom assistants, all without depending on the cloud or paying monthly fees. In this article, I’ll walk you through building a self-hosted AI workflow hub on a home server, giving you full control, better privacy, and powerful automation.

We’ll combine tools like Docker for packaging software, Ollama to run local machine learning models, n8n to create visual automations, and Portainer for easy management. This setup is ideal for a moderately powerful x86-64 system like a mini-PC or an old desktop with at least 8GB of RAM, which can capably handle multiple services at once.

# Why Build a Local AI Hub?

When you self-host your tools, you move from being a user of services to an owner of infrastructure, and that’s powerful. A local hub is private (your data never leaves your network), cost-effective (no application programming interface (API) fees), and fully customizable.

The core of this hub is a small set of components in which:

- Ollama serves as your private, on-device AI brain, running models for text generation and analysis

- n8n acts as the nervous system, connecting Ollama to other apps (like calendars, email, or files) to build automated workflows

- Docker is the foundation, packaging each tool into separate, easy-to-manage containers

// Core Components of Your Self-Hosted AI Hub

| Tool | Primary Role | Key Benefit for Your Hub |
|---|---|---|
| Docker/Portainer | Containerization & management | Isolates apps, simplifies deployment, and provides a visual management dashboard |
| Ollama | Local large language model (LLM) server | Runs AI models locally for privacy; provides an API for other tools to use |
| n8n | Workflow automation platform | Visually connects Ollama to other services (APIs, databases, files) to create powerful automations |
| Nginx Proxy Manager | Secure access & routing | Provides a secure web gateway to your services with easy SSL certificate setup |

# Preparing Your Server Foundation

First, ensure your server is ready. We recommend a clean installation of Ubuntu Server LTS or a similar Linux distribution. Once installed, connect to your server via secure shell (SSH). The first and most important step is installing Docker, which will run all our subsequent tools.

// Installing Docker and Docker Compose

Run the following commands in your terminal to install Docker and Docker Compose. Docker Compose is a tool that lets you define and manage multi-container applications with a simple YAML file.

    sudo apt update && sudo apt upgrade -y
    sudo apt install apt-transport-https ca-certificates curl software-properties-common -y
    curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -
    sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"
    sudo apt update
    sudo apt install docker-ce docker-ce-cli containerd.io docker-compose-plugin -y
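The apt-key command used above is deprecated on recent Ubuntu releases and prints a warning there. As a hedged alternative, the sketch below stores Docker’s signing key in a dedicated keyring and references it with signed-by. The helper names docker_repo_line and add_docker_repo are hypothetical, and the keyring path /etc/apt/keyrings/docker.asc is an assumption you can change.

```shell
# Hypothetical helper: print the apt source line for Docker's repository.
# $1 = CPU architecture (e.g. amd64), $2 = Ubuntu codename (e.g. jammy)
docker_repo_line() {
  printf 'deb [arch=%s signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu %s stable\n' "$1" "$2"
}

# Hypothetical helper: save Docker's signing key to a keyring file and
# register the repository, instead of calling the deprecated apt-key.
add_docker_repo() {
  sudo install -m 0755 -d /etc/apt/keyrings
  sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc
  sudo chmod a+r /etc/apt/keyrings/docker.asc
  docker_repo_line "$(dpkg --print-architecture)" "$(lsb_release -cs)" |
    sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
  sudo apt update
}
```

Running add_docker_repo would take the place of the apt-key and add-apt-repository lines; the final apt install line stays the same.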

// Verifying and Setting Permissions

Verify the installation and add your user to the docker group so you can run commands without sudo:

    sudo docker version
    sudo usermod -aG docker $USER

You will need to log out and then log back in for this to take effect.
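To confirm that the new group membership is active in your current session, a small check like the following can help. This is a minimal sketch; in_group is a hypothetical helper name, and it only inspects the groups reported by id for the current login.

```shell
# Succeeds when the current session's group list contains the given group name.
in_group() {
  id -nG | tr ' ' '\n' | grep -qx "$1"
}

if in_group docker; then
  echo "docker group is active: docker should work without sudo"
else
  echo "docker group is not active yet: log out and back in first"
fi
```

If the second message appears even after running usermod, the session was started before the change and still carries the old group list.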

// Managing with Portainer

Instead of using only the command line, we’ll deploy Portainer, a web-based graphical user interface (GUI) for managing Docker. Create a directory for it and a docker-compose.yml file with the following commands.

    mkdir -p ~/portainer && cd ~/portainer
    nano docker-compose.yml

Paste the following configuration into the file. This tells Docker to download the Portainer image, restart it automatically, and expose its web interface on port 9000.

    services:
      portainer:
        image: portainer/portainer-ce:latest
        container_name: portainer
        restart: unless-stopped
        ports:
          - "9000:9000"
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock
          - portainer_data:/data
    volumes:
      portainer_data:

Save the file (Ctrl+X, then Y, then Enter). Now, deploy Portainer:

    docker compose up -d

Once the container is running, navigate to http://YOUR_SERVER_IP:9000 in your browser. For me, it’s http://localhost:9000.

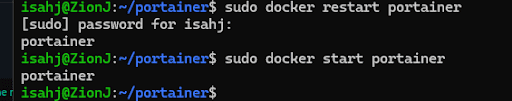

If Portainer asks you to restart it (for example, because its initial setup window timed out before you created an account), restart the container with the following command:

    sudo docker restart portainer

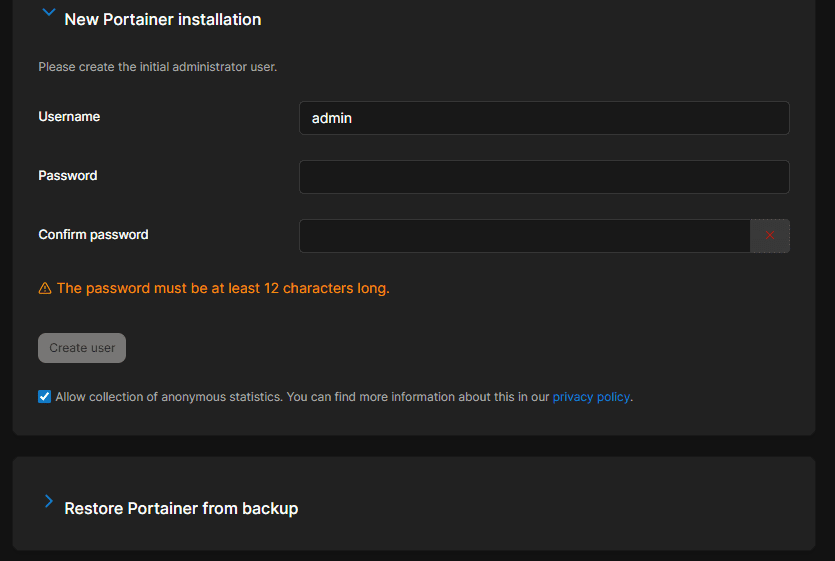

Create an admin account. Once the account exists, you will see the Portainer dashboard.

This is your mission control for all other containers. You can start, stop, view logs, and manage every other service from here.

# Installing Ollama: Your Local AI Engine

Ollama is a tool designed to easily run open-source large language models (LLMs) like Llama 3.2 or Mistral locally. It provides a simple API that n8n and other apps can use.

// Deploying Ollama with Docker

While Ollama can be installed directly, using Docker ensures consistency. Create a new directory and a docker-compose.yml file for it with the following commands.

    mkdir -p ~/ollama && cd ~/ollama
    nano docker-compose.yml

Use this configuration. The volumes line is important because it stores your downloaded machine learning models in a named volume, so you don’t lose them if the container is removed or recreated.

    services:
      ollama:
        image: ollama/ollama:latest
        container_name: ollama
        restart: unless-stopped
        ports:
          - "11434:11434"
        volumes:
          - ollama_data:/root/.ollama
    volumes:
      ollama_data:

Deploy it: docker compose up -d

// Pulling and Working Your First Mannequin

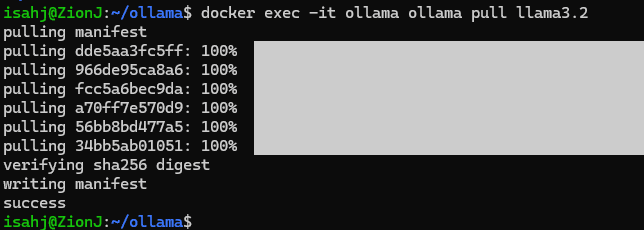

As soon as the container is working, you possibly can pull a mannequin. Let’s begin with a succesful however environment friendly mannequin like Llama 3.2.

This command executes ollama pull llama3.2 contained in the working container:

docker exec -it ollama ollama pull llama3.2

Activity Demonstration: Querying Ollama

Now you can work together together with your native AI immediately. The next command sends a immediate to the mannequin working contained in the container.

docker exec -it ollama ollama run llama3.2 "Write a brief haiku about expertise."

It is best to see a generated poem in your terminal. Extra importantly, Ollama’s API is now obtainable at http://YOUR_SERVER_IP:11434 for n8n to make use of.
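To see what other tools will receive from that API, you can call it yourself with curl. The sketch below is a minimal example, assuming the llama3.2 model has already been pulled; generate_payload and ask_ollama are hypothetical helper names. It sets "stream": false so that Ollama returns one JSON object instead of a stream of partial responses.

```shell
# Hypothetical helper: build the JSON body for Ollama's /api/generate endpoint.
# $1 = model name, $2 = prompt. Assumes neither contains double quotes or
# backslashes, because no JSON escaping is done here.
generate_payload() {
  printf '{"model": "%s", "prompt": "%s", "stream": false}' "$1" "$2"
}

# Hypothetical helper: send a prompt to an Ollama server and print the raw JSON reply.
# $1 = host (e.g. localhost or your server's IP), $2 = model, $3 = prompt
ask_ollama() {
  curl -s "http://$1:11434/api/generate" \
    -H "Content-Type: application/json" \
    -d "$(generate_payload "$2" "$3")"
}
```

For example, ask_ollama localhost llama3.2 "Write a short haiku about technology." prints a JSON object whose response field holds the generated text.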

# Integrating n8n for Intelligent Automation

n8n is a visual workflow automation tool. You can drag and drop nodes to create sequences; for example, “When I save a document, summarize it with Ollama, then send the summary to my notes app.”

// Deploying n8n with Docker

Create a directory for n8n. We’ll use a Compose file that includes a database for n8n to save your workflows and execution data.

    mkdir -p ~/n8n && cd ~/n8n
    nano docker-compose.yml

Now paste the following into the YAML file:

    services:
      n8n:
        image: n8nio/n8n:latest
        container_name: n8n
        restart: unless-stopped
        ports:
          - "5678:5678"
        environment:
          - N8N_PROTOCOL=http
          - WEBHOOK_URL=http://YOUR_SERVER_IP:5678/
          - N8N_ENCRYPTION_KEY=your_secure_encryption_key_here
          - DB_TYPE=postgresdb
          - DB_POSTGRESDB_HOST=db
          - DB_POSTGRESDB_PORT=5432
          - DB_POSTGRESDB_DATABASE=n8n
          - DB_POSTGRESDB_USER=n8n
          - DB_POSTGRESDB_PASSWORD=your_secure_db_password
        volumes:
          - n8n_data:/home/node/.n8n
        depends_on:
          - db
      db:
        image: postgres:17-alpine
        container_name: n8n_db
        restart: unless-stopped
        environment:
          - POSTGRES_USER=n8n
          - POSTGRES_PASSWORD=your_secure_db_password
          - POSTGRES_DB=n8n
        volumes:
          - postgres_data:/var/lib/postgresql/data
    volumes:
      n8n_data:
      postgres_data:

Replace YOUR_SERVER_IP, the placeholder encryption key, and the placeholder passwords (the database password must be identical in both services). Deploy with docker compose up -d. Access n8n at http://YOUR_SERVER_IP:5678.
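One way to produce values for those placeholders is to read random bytes from the system and print them as hex. This is a minimal sketch rather than a required method; random_hex is a hypothetical helper name, and the lengths are assumptions you can adjust.

```shell
# Hypothetical helper: print N random bytes as a lowercase hex string (default 32 bytes).
random_hex() {
  head -c "${1:-32}" /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Print lines you can copy into the environment section of the Compose file.
echo "N8N_ENCRYPTION_KEY=$(random_hex 32)"
echo "DB_POSTGRESDB_PASSWORD=$(random_hex 16)"
```

Copy the generated password into both DB_POSTGRESDB_PASSWORD and POSTGRES_PASSWORD so that n8n and the database agree.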

Task Demonstration: Building Your First AI Workflow

Let’s create a simple workflow where n8n uses Ollama to act as a creative writing assistant.

- In the n8n editor, add a “Schedule Trigger” node and set it to run manually for testing

- Add an “HTTP Request” node. Configure it to call your Ollama API:

  - Method: POST

  - URL: http://ollama:11434/api/generate (the hostname ollama resolves only when the n8n and Ollama containers share a Docker network; otherwise use http://YOUR_SERVER_IP:11434/api/generate)

  - Set Body Content Type to JSON

  - In the JSON body, enter: {"model": "llama3.2", "prompt": "Generate three ideas for a sci-fi short story.", "stream": false} (setting "stream" to false makes Ollama return a single JSON object rather than a stream of partial responses)

- Add a “Set” node to extract just the text from Ollama’s JSON response. Set the value to {{ $json["response"] }}

- Add a “Code” node and use a simple line like items = [{"json": {"story_ideas": $input.item.json}}]; return items; to format the data

- Finally, connect an “Email Send” node (configured with your email service) or a “Save to File” node to output the results

Click “Execute Workflow.” n8n will send the prompt to your local Ollama container, receive the ideas, and process them. You’ve just built a private, automated AI assistant.

# Securing Your Hub with Nginx Proxy Manager

You now have services on different ports (Portainer: 9000, n8n: 5678). Nginx Proxy Manager (NPM) lets you access them via neat subdomains (like portainer.home.net) with free secure sockets layer (SSL) encryption from Let’s Encrypt.

// Deploying Nginx Proxy Manager

Create a final directory for NPM.

    mkdir -p ~/npm && cd ~/npm
    nano docker-compose.yml

Paste the following code into your YAML file:

    services:
      app:
        image: 'jc21/nginx-proxy-manager:latest'
        container_name: nginx-proxy-manager
        restart: unless-stopped
        ports:
          - '80:80'
          - '443:443'
          - '81:81'
        volumes:
          - ./data:/data
          - ./letsencrypt:/etc/letsencrypt
    volumes:
      data:
      letsencrypt:

Deploy with docker compose up -d.

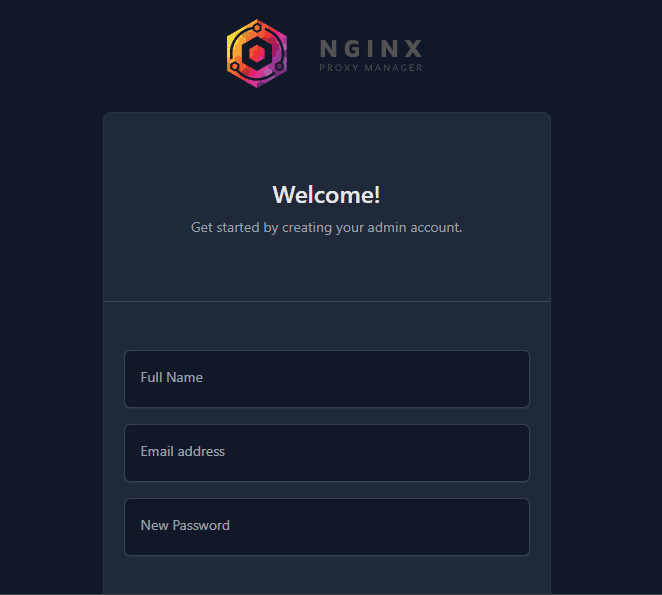

The admin panel is at http://YOUR_SERVER_IP:81. Log in with the default credentials (admin@example.com / changeme) and change them immediately.

Task Demonstration: Securing n8n Access

- On your home router, forward ports 80 and 443 to your server’s internal internet protocol (IP) address. This is the only port forwarding required

- In NPM’s admin panel (your-server-ip:81), go to Hosts -> Proxy Hosts -> Add Proxy Host

- For n8n, fill in the details:

  - Domain: n8n.yourdomain.com (or a subdomain you own pointing to your home IP)

  - Scheme: http

  - Forward Hostname / IP: n8n (Docker’s internal DNS resolves the container name, provided NPM and n8n are attached to the same Docker network)

  - Forward Port: 5678

- Click SSL and request a Let’s Encrypt certificate, forcing SSL

You can now securely access n8n at https://n8n.yourdomain.com. Repeat for Portainer (portainer.yourdomain.com forwarding to portainer:9000).
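Forwarding to n8n or portainer by name works only if NPM shares a Docker network with those containers, and each Compose file in this guide creates its own separate default network. One way to bridge them is a shared user-defined network. The sketch below is a minimal example under that assumption; join_network and the network name ai-hub are hypothetical, and the DOCKER variable exists only so you can preview the commands.

```shell
# Hypothetical helper: attach the named containers to one user-defined network
# so they can reach each other by container name.
# $1 = network name, remaining arguments = container names.
# Set DOCKER="echo docker" to print the connect commands instead of running them.
join_network() {
  net=$1
  shift
  # Create the network only if it does not exist yet.
  ${DOCKER:-docker} network inspect "$net" > /dev/null 2>&1 ||
    ${DOCKER:-docker} network create "$net"
  for name in "$@"; do
    ${DOCKER:-docker} network connect "$net" "$name"
  done
}
```

For this hub, the call would be join_network ai-hub nginx-proxy-manager n8n portainer ollama, which also lets n8n reach Ollama at http://ollama:11434. Connections made this way may not survive a container being recreated by docker compose, so declaring the shared network in each Compose file is the more durable option.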

# Conclusion

You now have a fully functioning, private AI automation hub. Your next steps could be:

- Expanding Ollama: Experiment with different models like Mistral for speed or codellama for programming tasks

- Advanced n8n Workflows: Connect your hub to external APIs (Google Calendar, Telegram, RSS feeds) or internal services (like a local file server)

- Monitoring: Add a tool like Uptime Kuma (also deployable via Docker) to monitor the status of all your services
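Before setting up a full monitoring tool, a quick availability check can be sketched in shell. This is a minimal example, not a replacement for Uptime Kuma; hub_endpoints and check_hub are hypothetical helper names, and the ports are the ones published in the Compose files in this guide.

```shell
# Hypothetical helper: list each service of this hub with its URL.
# $1 = server host or IP.
hub_endpoints() {
  printf 'portainer http://%s:9000\n' "$1"
  printf 'ollama http://%s:11434\n' "$1"
  printf 'n8n http://%s:5678\n' "$1"
  printf 'npm-admin http://%s:81\n' "$1"
}

# Hypothetical helper: report whether each endpoint answers an HTTP request
# within 5 seconds. Any HTTP response counts as "up", whatever its status code.
check_hub() {
  hub_endpoints "$1" | while read -r name url; do
    if curl -s -o /dev/null --max-time 5 "$url"; then
      echo "$name up"
    else
      echo "$name down"
    fi
  done
}
```

Running check_hub YOUR_SERVER_IP prints one line per service, which is enough to spot a container that failed to start.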

This setup turns your modest hardware into a powerful, private digital brain. You control the software, own the data, and pay no ongoing fees. The skills you’ve learned managing containers, orchestrating services, and automating with AI are the foundation of modern, independent tech infrastructure.


Shittu Olumide is a software engineer and technical writer passionate about leveraging cutting-edge technologies to craft compelling narratives, with a keen eye for detail and a knack for simplifying complex concepts. You can also find Shittu on Twitter.