Researchers from the University of Georgia and Massachusetts General Hospital (MGH) have developed a specialised language model, RadiologyLlama-70B, to analyse and generate radiology reports. Built on Llama 3-70B, the model is trained on extensive medical datasets and delivers impressive performance in processing radiological findings.

Context and significance

Radiological studies are a cornerstone of disease diagnosis, but the growing volume of imaging data places significant strain on radiologists. AI has the potential to alleviate this burden, improving both efficiency and diagnostic accuracy. RadiologyLlama-70B marks a key step towards integrating AI into clinical workflows, enabling streamlined analysis and interpretation of radiology reports.

Training data and preparation

The model was trained on a database of over 6.5 million patient medical reports from MGH, covering the years 2008–2018. According to the researchers, these reports span a wide range of imaging modalities and anatomical regions, including CT scans, MRIs, X-rays, and fluoroscopic imaging.

The dataset contains:

- Detailed radiologist observations (findings)

- Final impressions

- Study codes indicating imaging techniques such as CT, MRI, and X-rays
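Each report thus pairs free-text findings with a final impression, a shape that suits supervised fine-tuning. The sketch below assumes the common setup in which the model reads the findings and writes the impression. The article does not describe the prompt format, so `RadiologyReport`, `PROMPT_TEMPLATE`, and `to_training_pair` are hypothetical names and the template is illustrative only.

```python
from dataclasses import dataclass


@dataclass
class RadiologyReport:
    """One de-identified report with the three fields listed above."""
    study_code: str   # imaging technique code (format assumed to be free text)
    findings: str     # radiologist's detailed observations
    impression: str   # final impression


# Hypothetical instruction template; not the researchers' actual prompt.
PROMPT_TEMPLATE = (
    "### Study: {study_code}\n"
    "### Findings:\n{findings}\n"
    "### Impression:\n"
)


def to_training_pair(report: RadiologyReport) -> dict[str, str]:
    """Turn a report into a (prompt, target) pair for supervised fine-tuning."""
    prompt = PROMPT_TEMPLATE.format(
        study_code=report.study_code.strip(),
        findings=report.findings.strip(),
    )
    # The model would be trained to continue the prompt with the impression.
    return {"prompt": prompt, "target": report.impression.strip()}
```

Keeping the study code in the prompt lets one model condition on the imaging technique instead of training separate models per modality.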

After thorough preprocessing and de-identification, the final training set consisted of 4,354,321 reports, with an additional 2,114 reports set aside for testing. Rigorous cleaning methods, such as removing incorrect data, were applied to reduce the likelihood of “hallucinations” (incorrect outputs).
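The article describes this cleaning only at a high level. As a rough illustration of the kind of steps involved, the sketch below scrubs two toy identifier patterns, drops incomplete or duplicate reports (a simple stand-in for "removing incorrect data"), and assigns a deterministic hash-based holdout. The regexes, filtering rules, hash split, and function names are all assumptions, not the researchers' pipeline; real de-identification of clinical text needs far more thorough, validated tooling. Only the holdout fraction comes from the article's counts: 2,114 test reports out of 4,356,435 in total, roughly 0.05%.

```python
import hashlib
import re

# Toy identifier patterns (assumption): illustrative only, not a real PHI scrubber.
DATE_RE = re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b")
MRN_RE = re.compile(r"\bMRN[:#]?\s*\d+\b", re.IGNORECASE)

# Holdout fraction implied by the article's counts: 2,114 test of 4,356,435 total.
TEST_FRACTION = 2_114 / (4_354_321 + 2_114)


def scrub(text: str) -> str:
    """Replace date-like and MRN-like strings with placeholder tokens."""
    text = DATE_RE.sub("[DATE]", text)
    return MRN_RE.sub("[MRN]", text)


def clean(reports: list[dict[str, str]]) -> list[dict[str, str]]:
    """Scrub each report, then drop incomplete records and exact duplicates."""
    seen: set[tuple[str, str]] = set()
    kept: list[dict[str, str]] = []
    for report in reports:
        findings = scrub(report.get("findings", "")).strip()
        impression = scrub(report.get("impression", "")).strip()
        if not findings or not impression:
            continue  # incomplete: no usable findings -> impression pair
        key = (findings, impression)
        if key in seen:
            continue  # exact duplicate of a report already kept
        seen.add(key)
        kept.append({"findings": findings, "impression": impression})
    return kept


def is_test(report: dict[str, str], fraction: float = TEST_FRACTION) -> bool:
    """Deterministic holdout: map a hash of the findings text into [0, 1)."""
    digest = hashlib.sha256(report["findings"].encode("utf-8")).digest()
    # 48 bits fit exactly in a float, so bucket is always strictly below 1.0
    bucket = int.from_bytes(digest[:6], "big") / 2**48
    return bucket < fraction
```

Hashing the findings text keeps the split stable across reruns, and reports with identical findings always land on the same side, which helps avoid leakage between training and test data.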

Technical highlights

The model was trained using two approaches:

- Full fine-tuning: updating all model parameters.

- QLoRA: a low-rank adaptation method that trains small adapter matrices on top of a frozen, 4-bit-quantized base model, reducing the memory and compute required.
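The efficiency gap between the two approaches comes down to arithmetic. LoRA freezes each weight matrix of shape d_in × d_out and learns a low-rank update from two small matrices, so it trains r × (d_in + d_out) parameters per matrix instead of d_in × d_out; QLoRA additionally stores the frozen base weights in 4 bits. The sketch below assumes adapters on all seven linear projections of every block and a rank of 16 (the article reports neither), and takes layer shapes from the public Llama 3-70B configuration. It ignores embeddings, activations, optimizer state, and quantization constants.

```python
# Llama 3-70B shapes (from its public model config; verify before relying on them).
HIDDEN = 8192          # model width
INTERMEDIATE = 28672   # MLP width
LAYERS = 80            # transformer blocks
KV_DIM = 8 * 128       # 8 key/value heads x head_dim 128 (grouped-query attention)

# (d_in, d_out) of each linear projection in one block
PROJECTIONS = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV_DIM),
    "v_proj": (HIDDEN, KV_DIM),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, INTERMEDIATE),
    "up_proj": (HIDDEN, INTERMEDIATE),
    "down_proj": (INTERMEDIATE, HIDDEN),
}


def full_params() -> int:
    """Parameters in these projections that full fine-tuning would update."""
    return LAYERS * sum(d_in * d_out for d_in, d_out in PROJECTIONS.values())


def lora_params(rank: int) -> int:
    """Trainable LoRA parameters: A is (rank x d_in), B is (d_out x rank)."""
    return LAYERS * sum(rank * (d_in + d_out) for d_in, d_out in PROJECTIONS.values())


def weight_gb(n_params: float, bits: int) -> float:
    """Raw weight storage in GB (1e9 bytes), ignoring quantization constants."""
    return n_params * bits / 8 / 1e9


if __name__ == "__main__":
    r = 16  # hypothetical rank; the article does not report the one used
    print(f"full projections:  {full_params():,}")
    print(f"LoRA r={r}:         {lora_params(r):,}")
    print(f"70B weights BF16:  {weight_gb(70e9, 16):.0f} GB")
    print(f"70B weights 4-bit: {weight_gb(70e9, 4):.0f} GB")
```

Under these assumptions the script reports 68,451,041,280 parameters in the projections that full fine-tuning would update versus 207,093,760 trainable LoRA parameters (about 0.3%), and 140 GB versus 35 GB for the raw 70B weights in BF16 versus 4-bit.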

Training infrastructure

The training process ran on a cluster of 8 NVIDIA H100 GPUs and used:

- Mixed-precision training (BF16)

- Gradient checkpointing to reduce memory use

- DeepSpeed ZeRO Stage 3 for distributed training
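A DeepSpeed configuration combining BF16 mixed precision with ZeRO Stage 3 might look like the following, written as a Python dict and serialized to JSON. The key names are standard DeepSpeed configuration fields, but every value except the GPU count is a hypothetical placeholder; the article does not publish the researchers' configuration.

```python
import json

NUM_GPUS = 8        # from the article: 8 NVIDIA H100s
MICRO_BATCH = 1     # hypothetical per-GPU batch size
GRAD_ACCUM = 16     # hypothetical gradient accumulation steps

ds_config = {
    # DeepSpeed requires: global batch = micro-batch x accumulation x GPUs
    "train_batch_size": MICRO_BATCH * GRAD_ACCUM * NUM_GPUS,
    "train_micro_batch_size_per_gpu": MICRO_BATCH,
    "gradient_accumulation_steps": GRAD_ACCUM,
    "gradient_clipping": 1.0,  # hypothetical value
    # BF16 mixed precision
    "bf16": {"enabled": True},
    # ZeRO Stage 3: partition optimizer states, gradients, and parameters across GPUs
    "zero_optimization": {
        "stage": 3,
        "overlap_comm": True,
        "contiguous_gradients": True,
        # Gather the sharded weights into a single 16-bit checkpoint when saving
        "stage3_gather_16bit_weights_on_model_save": True,
    },
}

# Serialized form, e.g. to be written to ds_config.json
config_json = json.dumps(ds_config, indent=2)
```

Gradient checkpointing is usually switched on in the training framework rather than in this file. The article does not name a framework; if it were Hugging Face Transformers, one common route would be `TrainingArguments(..., deepspeed="ds_config.json", bf16=True, gradient_checkpointing=True)`.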

Performance results

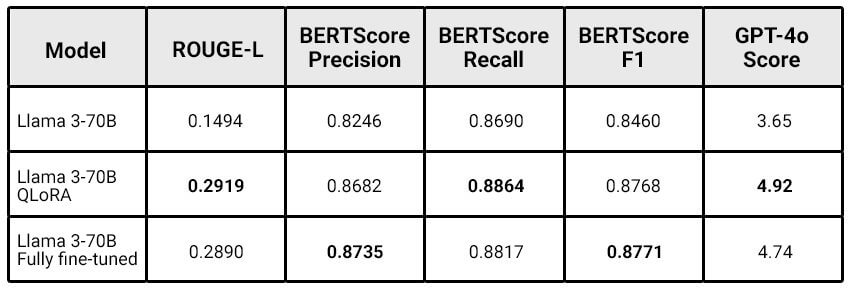

RadiologyLlama-70B considerably outperformed its base model (Llama 3-70B), as shown in the figure above.

QLoRA proved highly efficient, delivering results comparable to full fine-tuning at lower computational cost. The researchers noted: “The larger the model is, the more benefits QLoRA fine-tune can obtain.”

Limitations

The study acknowledges some limitations:

- No direct comparison with earlier models such as Radiology-llama2.

- The latest Llama 3.1 versions were not used.

- The model can still produce “hallucinations,” making it unsuitable for fully autonomous report generation.

Future directions

The research team plans to:

- Train the model on Llama 3.1-70B and explore versions with 405B parameters.

- Refine data preprocessing using language models.

- Develop tools to detect “hallucinations” in generated reports.

- Expand evaluation metrics to include clinically relevant criteria.

Conclusion

RadiologyLlama-70B represents a major advance in applying AI to radiology. While not ready for fully autonomous use, the model shows great potential to enhance radiologists’ workflows by delivering more accurate and relevant findings. The study highlights the potential of approaches like QLoRA for training specialised models for medical applications, paving the way for further innovation in healthcare AI.

For more details, see the full study on arXiv.