Mass AI-generated content has overwhelmed our social feeds, and that has set off a sense of panic among PR leaders. Brand communications were difficult before, but AI content has exacerbated this even further. Audiences have been left confused, whether by being unable to differentiate between real and fake personalities online or by fake statements circulated to mislead them. This has kept leadership wondering how they’ll cut through and reach their target audiences who are, at the moment, overstimulated with AI-driven content.

We analysed 30M data points between 1 January 2025 and 12 August 2025 globally on social and mainstream media like X, forums, online news, LinkedIn, TikTok, Instagram and Facebook. We looked at real-world cases of how audiences have been misled into believing something is real, and how this started a domino effect on the content being consumed by the world today.

The rise of synthetic content outranks fact-checkers by a mile

Synthetic media has taken over the internet, and audience feeds have been flooded with unrelated and unreliable AI content. What’s important to note is that, with mass AI content, unreliability can lead into a dangerous spiral of consuming nonsensical content that doesn’t benefit anyone. As a result, audiences have started to defend the content they see by wanting to subscribe only to what conforms with their expectations. With a decline in the number of fact-checkers, misinformation and disinformation have become rampant.

A real-world case came in the US, when a squirrel named Peanut (“P-Nut”) was euthanised for being illegally kept by a US citizen. A fake statement by President Donald Trump, disagreeing with the authorities’ decision to euthanise the animal, was widely circulated on X. Audiences sympathised with this statement and stood by Trump, going as far as gathering support to make him the next President. This was a snowball effect, and it happened because audiences had a public figure supporting what they were already thinking: Trump’s statement conformed with their expectations. Although the statement didn’t have any AI involvement, it has become a case in point for understanding how audiences perceive AI content. If they like what they see, whether it’s human or AI, not a second is spared to check its authenticity.

Virtual influencers are creeping in on social media feeds

Mia Zelu, a virtual influencer, grew popular during this year’s Wimbledon. On her Instagram, she uploaded a photo carousel that made it look like she was physically there at the All England Club enjoying a drink. At first, she seemed real, but the media and audiences quickly questioned it. She posted the photos in July of this year during the tournament with a caption, but was quick to disable her comment section, adding to the mystery and debate. There was a lot of online backlash, with audiences clearly frustrated at how easily deceiving this could be. Despite the backlash, her follower count grew and her account now has about 168k followers.

The conversation around AI influencers is only just beginning and raises serious questions about authenticity, digital consumption and how AI personas can actually affect audience perceptions, all without actually existing.

AI means brands are operating in a space of reduced trust

According to the AI Marketing Benchmark Report 2024, the trust deficit directly impacts brand communications strategies, as 36.7% of marketers worry about the authenticity of AI-driven content, while 71% of consumers admit they struggle to trust what they see or hear because of AI.

Audiences are not rejecting AI outright, but the opacity around it could be dangerous, making their confidence in AI as a tool shaky. This is where PR leaders need to make authentic communication a necessity and not just a “nice-to-have”. In times when audiences are doubting whether a message was written by a human or a machine, the value of genuine and sincere human-driven storytelling rises.

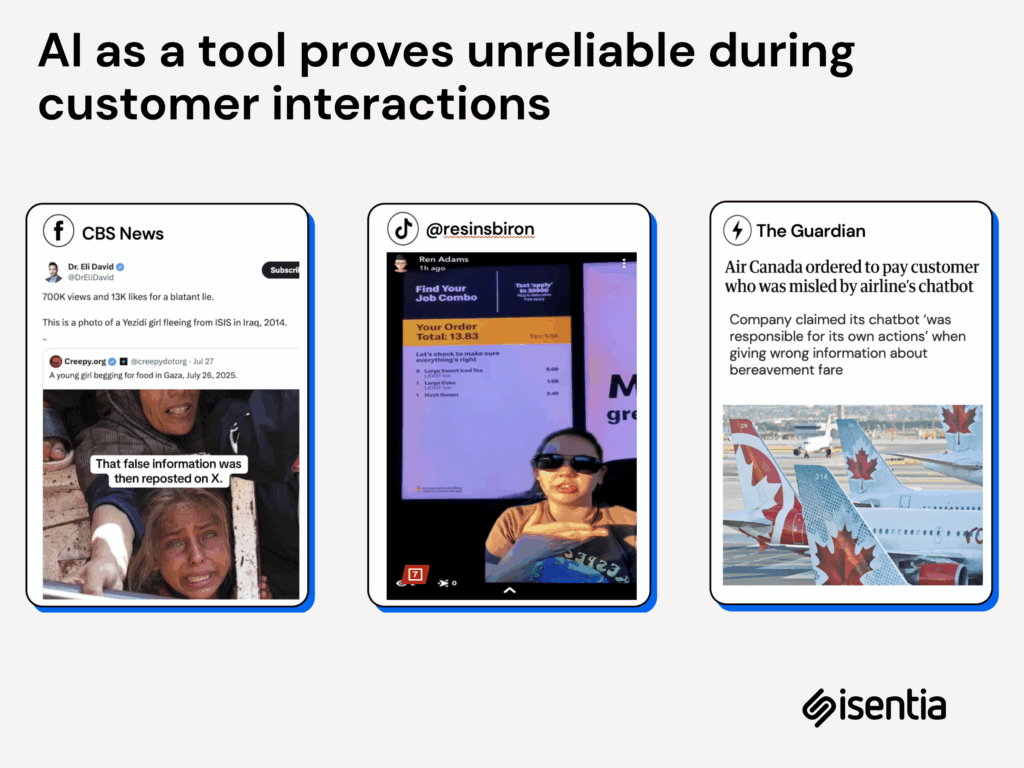

Real-world scenarios where AI as a tool misses

Scepticism towards AI doesn’t just come from high-profile controversies. It shows up in small, everyday moments that frustrate audiences and remind them how fragile trust can be.

- Grok’s image blunder: A widely shared photo of a young girl begging for food in Gaza was wrongly tagged by Grok as being from Iraq in 2004. The error spread quickly across platforms, fuelling anger about misinformation and raising questions about the reliability of AI tools.

- McDonald’s drive-thru glitch: A customer in the US posted a TikTok showing how AI at a drive-thru added nine extra sweet teas to her order. The error, caused by crosstalk from another lane, might seem trivial, but it highlights how automation can fail at simple tasks and how easily these failures go viral when shared online.

- Air Canada chatbot case: A customer seeking information about bereavement fares was misled by the airline’s chatbot. When the company was asked to explain this, it claimed the chatbot was “responsible for its own actions.” A Canadian tribunal then rejected this defence and ordered Air Canada to compensate the passenger. The incident drew widespread coverage, reinforcing public concerns that businesses are over-relying on AI without accountability.

Audiences expect to consume “more real and less fake”

The November 2024 Coca-Cola holiday campaign controversy exemplifies how quickly AI-generated content can trigger consumer backlash. When Coca-Cola used AI to create three holiday commercials, the response was overwhelmingly negative, with both consumers and creative professionals condemning the company’s decision not to employ human artists. Despite Coca-Cola’s defence that it remains dedicated to creating work that involves both human creativity and technology, the incident highlighted how AI usage in creative content can be perceived as a betrayal of brand authenticity, which is particularly devastating for a company whose holiday campaigns have historically celebrated human connection and nostalgia.

This kind of response to a multinational company really sets the record straight on what audiences expect to consume. PR leaders and marketers need to tread carefully when creating content, making sure they do not depend on AI so heavily that anyone can point out the absence of human creativity. Authenticity is in crisis only when we let go of our control around AI. This mandates a need for more fact-checkers and more audits around brands and leadership.

Interested in learning how Isentia can help? Fill in your details to access the full Authenticity Report 2025, which uncovers cues for measuring brand and stakeholder authenticity.

The post “AI has saturated audience news and social feeds” appeared first on Isentia.