A new paper from researchers in China and Spain finds that even advanced multimodal AI models such as GPT-4.1 struggle to tell the time from images of analog clocks. Small visual changes in the clocks can cause major interpretation errors, and fine-tuning only helps with familiar examples. The results raise concerns about the reliability of these models when they are faced with unfamiliar images in real-world tasks.

When humans develop a deep enough understanding of a domain, such as gravity or other basic physical principles, we move beyond specific examples to grasp the underlying abstractions. This allows us to apply that knowledge creatively across contexts and to recognize new instances, even ones we have never seen before, by identifying the principle in action.

When a domain carries enough importance, we may even begin to perceive it where it does not exist, as with pareidolia, driven by the high cost of failing to recognize a real instance. So strong is this pattern-recognizing survival mechanism that it even disposes us to find patterns where there are none.

The earlier and more repetitively a domain is instilled in us, the deeper its grounding and the more lasting its persistence; and one of the earliest visual datasets we are exposed to as children comes in the form of teaching clocks, where printed material or interactive analog clocks are used to teach us how to tell the time:

Teaching aids to help children learn to tell the time. Source: https://www.youtube.com/watch?v=IBBQXBhSNUs

Though changing fashions in watch design may sometimes challenge us, the resilience of this early domain mastery is quite impressive, allowing us to read analog clock faces even in the face of complex or ‘eccentric’ design choices:

Some challenging faces in watch couture. Source: https://www.ablogtowatch.com/wait-a-minute-legibility-is-the-most-important-part-of-watch-design/

Humans don’t need thousands of examples to learn how clocks work; once the basic concept is grasped, we can recognize it in almost any form, even when it is distorted or abstracted.

The difficulty that AI models have with this task, by contrast, highlights a deeper issue: their apparent strength may depend more on high-volume exposure than on understanding.

Beyond the Imitation Game?

The tension between surface-level performance and genuine ‘understanding’ has surfaced repeatedly in recent investigations of large models. Last month, Zhejiang University and Westlake University reframed the question in a paper titled Do PhD-level LLMs Truly Grasp Elementary Addition? (not the focus of this article), concluding:

‘Despite impressive benchmarks, models show critical reliance on pattern matching rather than true understanding, evidenced by failures with symbolic representations and violations of basic properties.

‘Explicit rule provision impairing performance suggests inherent architectural constraints. These insights reveal evaluation gaps and highlight the need for architectures capable of genuine mathematical reasoning beyond pattern recognition.’

This week the question arises again, now in a collaboration between Nanjing University of Aeronautics and Astronautics and the Universidad Politécnica de Madrid in Spain. Titled Have Multimodal Large Language Models (MLLMs) Really Learned to Tell the Time on Analog Clocks?, the new paper explores how well multimodal models understand time-telling.

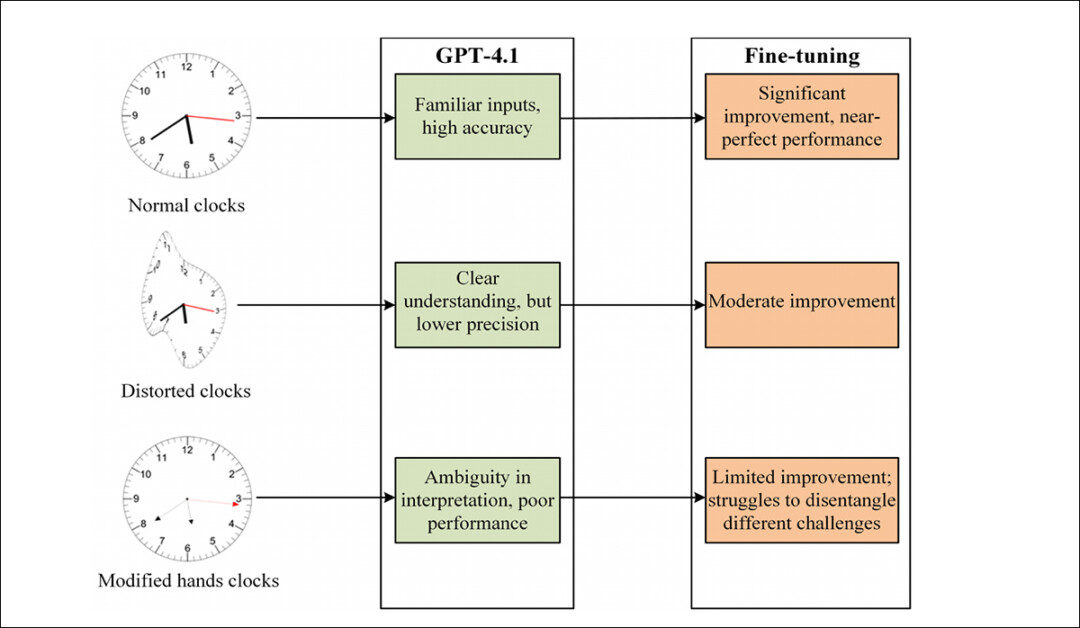

Though the progress of the research is covered only in broad detail in the paper, the researchers’ initial tests established that OpenAI’s GPT-4.1 multimodal language model struggled to read the time correctly from a diverse set of clock images, often giving wrong answers even in simple cases.

This points to a possible gap in the model’s training data, and to the need for a more balanced dataset to test whether the model can actually learn the underlying concept. Therefore the authors curated a synthetic dataset of analog clocks that evenly covers every possible time and avoids the usual biases found in internet images:

An example from the researchers’ synthetic analog clock dataset, used to fine-tune a GPT model in the new work. Source: https://huggingface.co/datasets/migonsa/analog_watches_finetune
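The even coverage described here can be illustrated with a short sketch. It is not the authors’ generation code, which the article does not describe. It simply assumes a 12-hour dial read to the second, enumerates all 43,200 possible settings exactly once, and computes the angle of each hand (in degrees clockwise from 12 o’clock) that a hypothetical renderer would then draw. The names `hand_angles` and `all_times` are illustrative.

```python
# Minimal sketch (assumption): enumerate every time on a 12-hour dial at
# one-second resolution and compute each hand's angle, in degrees clockwise
# from 12 o'clock. A renderer (not shown) could draw one clock per time.
from typing import Iterator, NamedTuple


class HandAngles(NamedTuple):
    hour: float
    minute: float
    second: float


def hand_angles(h: int, m: int, s: int) -> HandAngles:
    """Angles in degrees for a time on a 12-hour analog dial (12 is treated as 0)."""
    total_seconds = (h % 12) * 3600 + m * 60 + s
    # The hour hand sweeps 360 degrees in 12 hours (43,200 s).
    hour = total_seconds * 360 / 43_200
    # The minute hand sweeps 360 degrees in 60 minutes (3,600 s).
    minute = (m * 60 + s) * 360 / 3_600
    # The second hand moves 6 degrees per second (ticking, not sweeping).
    second = s * 6.0
    return HandAngles(hour, minute, second)


def all_times() -> Iterator[tuple[int, int, int]]:
    """Every distinct (hour, minute, second) on a 12-hour dial, each exactly once."""
    for h in range(12):
        for m in range(60):
            for s in range(60):
                yield h, m, s


times = list(all_times())
print(len(times))               # 43200
print(hand_angles(10, 10, 0))   # HandAngles(hour=305.0, minute=60.0, second=0.0)
```

Because every setting appears once, a configuration such as 10:10 carries no more weight than any other, which is the property a scraped web dataset tends to lack.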

Before fine-tuning on the new dataset, GPT-4.1 consistently failed to read these clocks. After some exposure to the new collection, however, its performance improved – but only when the new images looked like ones it had already seen.

When the shape of the clock or the style of the hands changed, accuracy fell sharply. Even small tweaks, such as thinner hands or arrowheads (rightmost image below), were enough to throw it off, and GPT-4.1 also struggled to interpret Dalí-esque ‘melting clocks’:

Clock images with standard design (left), distorted shape (middle), and modified hands (right), alongside the times returned by GPT-4.1 before and after fine-tuning. Source: https://arxiv.org/pdf/2505.10862

The authors conclude that current models such as GPT-4.1 may therefore be learning to read clocks mainly through visual pattern matching rather than through any deeper concept of time, stating:

‘[GPT 4.1] fails when the clock is deformed or when the hands are changed to be thinner and to have an arrowhead. The Mean Absolute Error (MAE) in the time estimate over 150 random times was 232.48s for the initial clocks, 1380.69s when the shape is deformed and 3726.93s when hands are changed.

‘These results suggest that the MLLM has not learned to tell the time but rather memorized patterns.’

Sufficient Time

Most training datasets rely on scraped web images, which tend to repeat certain times – especially 10:10, a popular setting in watch advertisements:

From the new paper, an example of the prevalence of the ‘ten past ten’ time in analog clock images.

Because of the limited range of times depicted, the model may see only a narrow set of possible clock configurations, which limits its ability to generalize beyond these repetitive patterns.

On why models fail to interpret the distorted clocks correctly, the paper states:

‘Although GPT-4.1 performs exceptionally well with standard clock images, it is surprising that modifying the clock hands by making them thinner and adding arrowheads leads to a significant drop in its accuracy.

‘Intuitively, one might expect that the more visually complex change – a distorted dial – would have a greater impact on performance, yet this modification seems to have a relatively smaller effect.

‘This raises a question: how do MLLMs interpret clocks, and why do they fail? One possibility is that thinner hands impair the model’s ability to perceive direction, weakening its understanding of spatial orientation.

‘Alternatively, there could be other factors that cause confusion when the model attempts to combine the hour, minute, and second hands into an accurate time reading.’

The authors contend that identifying the root cause of these failures is key to advancing multimodal models: if the issue lies in how the model perceives spatial direction, fine-tuning may offer a simple fix; but if the problem stems from a broader difficulty in integrating multiple visual cues, it points to a more fundamental weakness in how these systems process information.

Fine-Tuning Tests

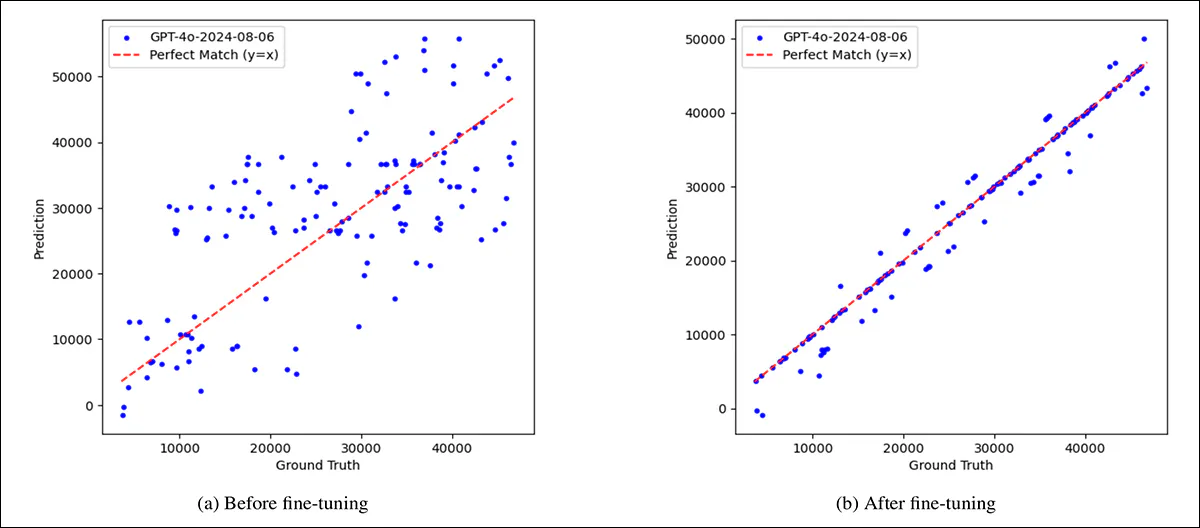

To test whether the model’s failures could be overcome through exposure, GPT-4.1 was fine-tuned on the comprehensive synthetic dataset described above. Before fine-tuning, its predictions were widely scattered, with significant errors across all types of clock. After fine-tuning on the collection, accuracy improved sharply on standard clock faces and, to a lesser extent, on distorted ones.

However, clocks with modified hands, such as thinner shapes or arrowheads, continued to produce large errors.

Two distinct failure modes emerged: on normal and distorted clocks, the model usually misjudged the direction of the hands; but on clocks with altered hand styles, it often confused the function of each hand, mistaking the hour hand for the minute hand, or the minute hand for the second hand.

A comparison illustrating the model’s initial weakness and the partial gains achieved through fine-tuning, showing predicted vs. actual time, in seconds, for 150 randomly selected clocks. On the left, before fine-tuning, GPT-4.1’s predictions are scattered and often far from the correct values, indicated by the red diagonal line. On the right, after fine-tuning on a balanced synthetic dataset, the predictions align much more closely with the ground truth, though some errors remain.

This suggests that the model had learned to associate visual features such as hand thickness with specific roles, and struggled when these cues changed.
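Why role confusion is so costly can be seen with a toy reader. The hypothetical `read_time` function below is an illustration only, not a model of how GPT-4.1 works. It takes the minutes from the minute hand and the hour from the hour hand, correcting the hour hand’s angle for its partial advance through the current hour, and ignores the second hand for brevity. Feeding it the same two angles with their roles swapped turns 10:10 into 1:51, more than three and a half hours away.

```python
# Toy reader (hypothetical, for illustration only): converts hour- and
# minute-hand angles (degrees clockwise from 12) into an (hour, minute)
# reading, where hour 0 stands for 12.


def read_time(hour_angle: float, minute_angle: float) -> tuple[int, int]:
    # The minute hand moves 6 degrees per minute.
    minute = round(minute_angle / 6) % 60
    # The hour hand moves 30 degrees per hour plus 0.5 degrees per minute,
    # so remove the minute contribution before rounding to the nearest hour.
    hour = round((hour_angle - 0.5 * minute) / 30) % 12
    return hour, minute


def minutes_apart(a: tuple[int, int], b: tuple[int, int]) -> int:
    """Shortest distance between two readings on a 12-hour dial, in minutes."""
    d = abs((a[0] * 60 + a[1]) - (b[0] * 60 + b[1])) % 720
    return min(d, 720 - d)


# True time 10:10: hour hand at 305 degrees, minute hand at 60 degrees.
correct = read_time(305.0, 60.0)   # hands assigned their true roles
swapped = read_time(60.0, 305.0)   # hour and minute roles confused
print(correct, swapped, minutes_apart(correct, swapped))  # (10, 10) (1, 51) 221
```

A small directional slip on a correctly identified hand shifts the reading by seconds or minutes, whereas swapping roles reassigns both values at once, which is consistent with the larger errors the authors report for hand-role confusion.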

The limited improvement on unfamiliar designs raises further doubts about whether a model of this kind learns the abstract concept of time-telling, or merely refines its pattern matching.

Hand Indicators

So, although fine-tuning improved GPT-4.1’s performance on conventional analog clocks, it had far less impact on clocks with thinner hands or arrowhead shapes, raising the possibility that the model’s failures stemmed less from abstract reasoning and more from confusion over which hand was which.

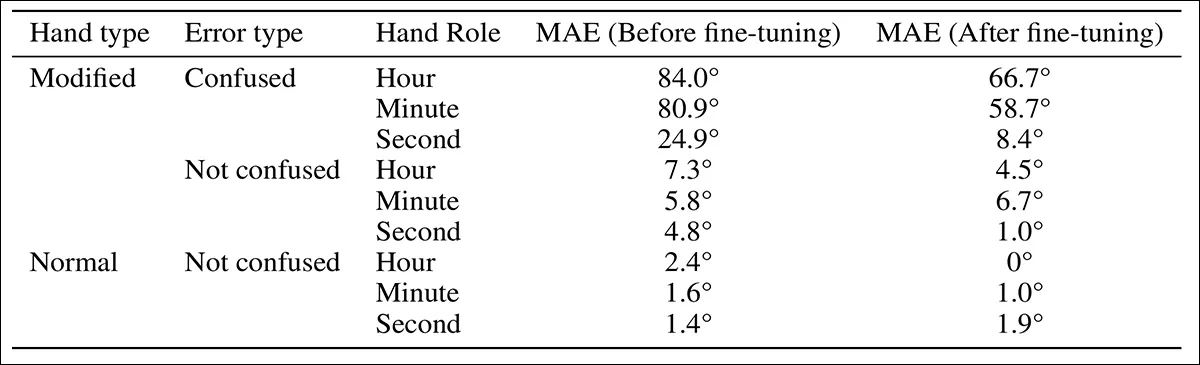

To test whether accuracy might improve if that confusion were removed, a new analysis was conducted on the model’s predictions for the ‘modified-hand’ dataset. The outputs were divided into two groups: cases where GPT-4.1 correctly recognized the hour, minute, and second hands, and cases where it did not.

The predictions were evaluated for Mean Absolute Error (MAE) before and after fine-tuning, and the results were compared with those from standard clocks; angular error was also measured for each hand, using dial position as a baseline:

Error comparison for clocks with and without hand-role confusion in the modified-hand dataset, before and after fine-tuning.

Confusing the roles of the clock hands led to the largest errors. When GPT-4.1 mistook the hour hand for the minute hand or vice versa, the resulting time estimates were often far off. By contrast, errors caused by misjudging the direction of a correctly identified hand were smaller. Among the three hands, the hour hand showed the highest angular error before fine-tuning, while the second hand showed the lowest.

Angular error by hand type for predictions with and without hand-role confusion, before and after fine-tuning, in the modified-hand dataset.
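Angular error can be computed per hand as the smallest difference between two dial positions. The sketch below assumes angles measured clockwise from 12 o’clock, which is one plausible reading of ‘dial position as a baseline’ rather than the paper’s confirmed definition. The example angles are invented for illustration.

```python
# Minimal sketch (assumption): per-hand angular error, with angles in degrees
# measured clockwise from 12 o'clock. The example angles are invented.


def angular_error(pred_deg: float, true_deg: float) -> float:
    """Smallest absolute difference between two dial angles (0 to 180 degrees)."""
    d = abs(pred_deg - true_deg) % 360
    return min(d, 360 - d)


true_angles = {"hour": 305.0, "minute": 60.0, "second": 0.0}   # 10:10:00
pred_angles = {"hour": 300.0, "minute": 66.0, "second": 354.0}

errors = {hand: angular_error(pred_angles[hand], true_angles[hand]) for hand in true_angles}
print(errors)  # {'hour': 5.0, 'minute': 6.0, 'second': 6.0}
```

Equal angular errors do not correspond to equal amounts of time: one degree spans two minutes on the hour hand, ten seconds on the minute hand, and a sixth of a second on the second hand. The same angular slip therefore means very different things depending on which hand it affects.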

To isolate directional errors, the analysis was restricted to cases where the model correctly identified each hand’s function. If the model had internalized a general concept of time-telling, its performance on these examples should have matched its accuracy on standard clocks. It did not: accuracy remained noticeably worse.

To examine whether hand shape interfered with the model’s sense of direction, a second experiment was run. Two new datasets were created, each containing sixty synthetic clocks with only an hour hand, each pointing to a different minute mark. One set used the original hand design, and the other the altered version. The model was asked to name the tick mark the hand was pointing to.
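The tick-mark task reduces to mapping a single hand angle onto one of sixty marks. The sketch below assumes marks every 6 degrees with index 0 at 12 o’clock. The helper names are hypothetical, and the scoring function simply counts exact matches, which may differ from how the authors scored the task.

```python
# Minimal sketch (assumption): map a single hand's angle to the nearest of 60
# tick marks (index 0 = 12 o'clock) and score predictions by exact match.


def nearest_tick(angle_deg: float, n_ticks: int = 60) -> int:
    """Index of the tick mark closest to the given hand angle."""
    step = 360 / n_ticks  # 6 degrees between minute marks
    return round((angle_deg % 360) / step) % n_ticks


def tick_accuracy(predicted: list[int], truth: list[int]) -> float:
    """Fraction of clocks whose predicted tick matches the true tick."""
    if len(predicted) != len(truth) or not truth:
        raise ValueError("need two equal-length, non-empty lists")
    return sum(p == t for p, t in zip(predicted, truth)) / len(truth)


# Sixty single-hand clocks, one pointing at each minute mark.
true_ticks = list(range(60))
angles = [tick * 6.0 for tick in true_ticks]
print(tick_accuracy([nearest_tick(a) for a in angles], true_ticks))  # 1.0
print(nearest_tick(357.5))  # 0, wrapping round to the 12 o'clock mark
```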

Results showed a slight drop in accuracy with the modified hands, but not enough to account for the model’s broader failures. A single unfamiliar visual feature appeared capable of disrupting the model’s overall interpretation, even in tasks it had previously performed well.

Overview of GPT-4.1’s performance before and after fine-tuning across standard, distorted, and modified-hand clocks, highlighting uneven gains and persistent weaknesses.

Conclusion

While the paper’s focus may seem trivial at first glance, it does not particularly matter whether vision-language models ever learn to read analog clocks with 100% accuracy. What gives the work weight is its focus on a deeper, recurring question: whether saturating models with more (and more diverse) data can lead to the kind of domain understanding that humans acquire through abstraction and generalization, or whether the only viable path is to flood the domain with enough examples to anticipate every likely variation at inference.

Either way, the results raise doubts about what current architectures are truly capable of learning.

First published Monday, May 19, 2025