LLMs have historically been self-contained models that accept text input and respond with text. In the last couple of years, we’ve seen multimodal LLMs enter the scene, like GPT-4o, that can accept and respond with other forms of media, such as images and videos.

But in just the past few months, some LLMs have been granted a powerful new ability, the ability to call arbitrary functions, which opens a huge world of possible AI actions. ChatGPT and Ollama both call this ability tools. To enable such tools, you must define the functions in a Python dictionary and fully describe their use and available parameters. The LLM tries to figure out what you’re asking and maps that request to one of the available tools/functions. We then parse the response before calling the actual function.
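As a rough illustration of that flow, the sketch below describes one function in the dictionary layout Ollama's chat API accepts for tools, then dispatches a tool call found in a response. Everything here is an assumption made for illustration: the `value` parameter, the stubbed `led_write` that only records state instead of driving a pin, and the hand-written `example` response. The downloaded script may be organized differently.

```python
# Hypothetical stand-in for real GPIO code: it only records the requested state.
led_state = {"on": False}

def led_write(value: int) -> None:
    """Pretend to drive the LED: 1 turns it on, 0 turns it off."""
    led_state["on"] = bool(int(value))

# Tool description in the dictionary layout used for Ollama tools.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "led_write",
            "description": "Turn the LED on or off",
            "parameters": {
                "type": "object",
                "properties": {
                    "value": {
                        "type": "number",
                        "description": "1 to turn the LED on, 0 to turn it off",
                    },
                },
                "required": ["value"],
            },
        },
    }
]

# Map tool names the model may return to the Python callables behind them.
AVAILABLE = {"led_write": led_write}

def dispatch(response: dict) -> list[str]:
    """Call every known tool requested in a chat response; return the names called."""
    called = []
    for call in response.get("message", {}).get("tool_calls") or []:
        name = call["function"]["name"]
        args = call["function"].get("arguments") or {}
        func = AVAILABLE.get(name)
        if func is None:
            continue  # skip tool names we never defined
        func(**args)
        called.append(name)
    return called

# Hand-written example shaped like a chat response containing one tool call.
example = {
    "message": {
        "role": "assistant",
        "content": "",
        "tool_calls": [{"function": {"name": "led_write", "arguments": {"value": 1}}}],
    }
}
print(dispatch(example), led_state)
```

With a running Ollama server, the `TOOLS` list would be passed as the `tools` argument of `ollama.chat(...)`, and the returned response handed to `dispatch` in place of `example`.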

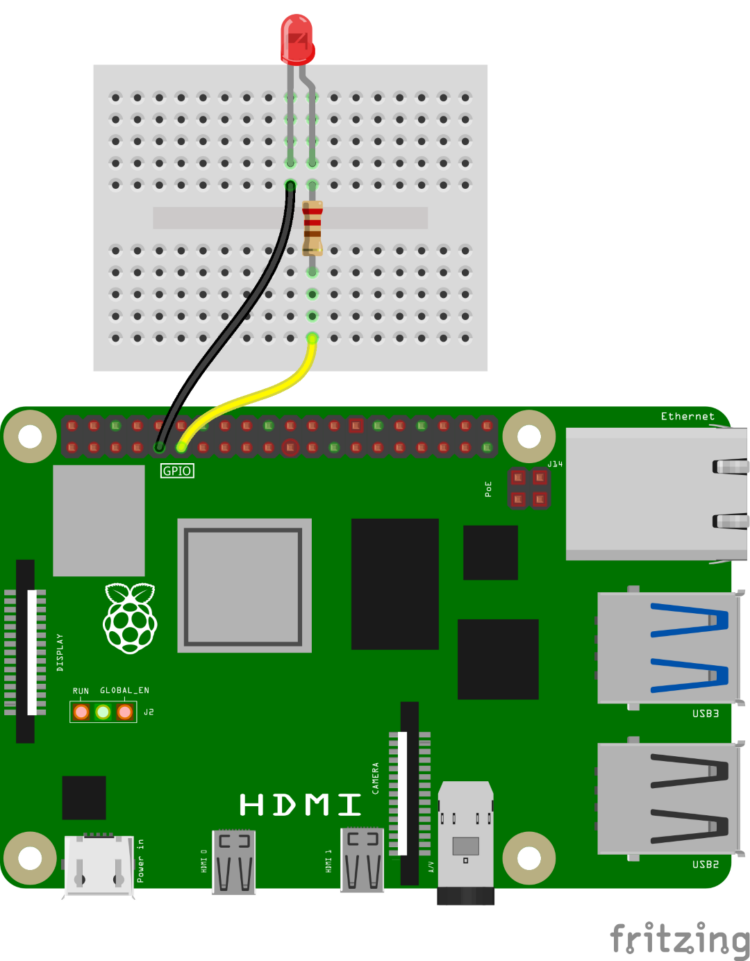

Let’s demonstrate this concept with a simple function that turns an LED on and off. Connect an LED with a limiting resistor to pin GPIO 17 on your Raspberry Pi 5.
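If you are unsure which resistor to use, Ohm's law gives a starting point. The sketch below is only a worked calculation; the 2.0 V forward voltage and 10 mA target current are assumed values for a typical red LED, so check the datasheet for yours. The Pi's GPIO pins output 3.3 V.

```python
def limiting_resistor(supply_v: float, forward_v: float, current_a: float) -> float:
    """Return the series resistance in ohms from Ohm's law: R = (Vs - Vf) / I."""
    if current_a <= 0:
        raise ValueError("current must be positive")
    if forward_v >= supply_v:
        raise ValueError("forward voltage must be below the supply voltage")
    return (supply_v - forward_v) / current_a

# Assumed values: 3.3 V GPIO, 2.0 V forward drop, 10 mA target current.
ohms = limiting_resistor(3.3, 2.0, 0.010)
print(f"{ohms:.0f} ohms")  # prints "130 ohms"
```

Rounding up to the next common resistor value keeps the current below the target.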

Make sure you’re in the venv-ollama virtual environment we configured earlier and install some dependencies:

$ source venv-ollama/bin/activate
$ sudo apt update
$ sudo apt upgrade
$ sudo apt install -y libportaudio2
$ python -m pip install ollama==0.3.3 vosk==0.3.45 sounddevice==0.5.0

You’ll need to download a new LLM model and the Vosk speech-to-text (STT) model:

$ ollama pull allenporter/xlam:1b
$ python -c "from vosk import Model; Model(lang='en-us')"

As this example uses speech-to-text to convey information to the LLM, you will need a USB microphone, such as Adafruit 3367. With the microphone connected, run the following command to discover the USB microphone device number:

$ python -c "import sounddevice; print(sounddevice.query_devices())"

You should see an output such as:

  0 USB PnP Sound Device: Audio (hw:2,0), ALSA (1 in, 0 out)
  1 pulse, ALSA (32 in, 32 out)
* 2 default, ALSA (32 in, 32 out)

Note the device number of the USB microphone. In this case, my microphone is device number 0, as given by USB PnP Sound Device. Copy this code to a file named ollama-light-assistant.py on your Raspberry Pi.

You can also download this file directly with the command:

$ wget https://gist.githubusercontent.com/ShawnHymel/16f1228c92ad0eb9d5fbebbfe296ee6a/raw/6161a9cb38d3f3c4388a82e5e6c6c58a150111cc/ollama-light-assistant.py

Open the code and change the AUDIO_INPUT_INDEX value to your USB microphone device number. For example, mine would be:

AUDIO_INPUT_INDEX = 0
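Device numbers can shift when you plug in other audio hardware, so one alternative to hard-coding the index is to look it up by name. The helper below is a hypothetical sketch, not part of the downloaded script. It works on any list of dictionaries with `name` and `max_input_channels` keys, which is the shape of the entries returned by `sounddevice.query_devices()`. The `sample` list is made-up data modeled on the listing above.

```python
def find_input_device(devices, name_fragment):
    """Return the index of the first input-capable device whose name contains the fragment."""
    for index, device in enumerate(devices):
        has_input = device.get("max_input_channels", 0) > 0
        if has_input and name_fragment.lower() in device.get("name", "").lower():
            return index
    return None  # nothing matched

# Sample entries modeled on the device listing (not read from real hardware).
sample = [
    {"name": "USB PnP Sound Device: Audio (hw:2,0)", "max_input_channels": 1},
    {"name": "pulse", "max_input_channels": 32},
    {"name": "default", "max_input_channels": 32},
]
AUDIO_INPUT_INDEX = find_input_device(sample, "USB")
print(AUDIO_INPUT_INDEX)  # prints 0
```

On the Pi, you would pass `sounddevice.query_devices()` in place of `sample` and handle the `None` case when no microphone is attached.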

Run the code with:

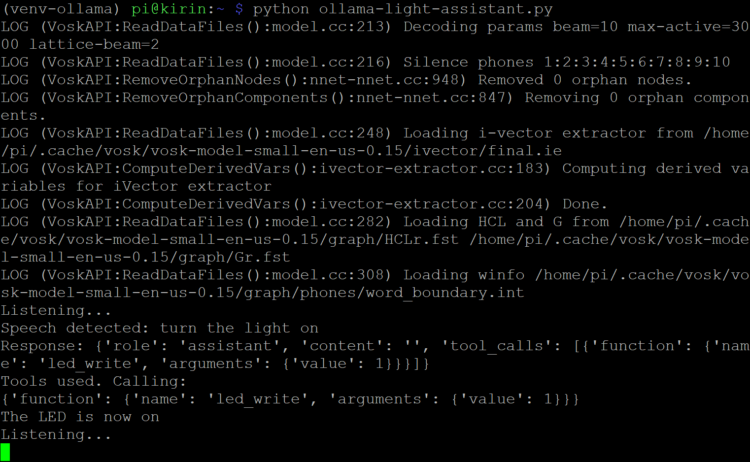

$ python ollama-light-assistant.py

You should see the Vosk STT system boot up and then the script will say “Listening…” At that point, try asking the LLM to “turn the light on.” Because the Pi isn’t optimized for LLMs, the response could take 30–60 seconds. With some luck, you should see that the led_write function was called, and the LED has turned on!
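Between listening and calling the LLM, the spoken phrase has to be pulled out of the recognizer's output. Vosk's recognizer returns results as JSON strings with a `text` field, so that step can be sketched as below. The `extract_text` helper is hypothetical and the script may handle this differently.

```python
import json

def extract_text(result_json: str) -> str:
    """Return the recognized phrase from a Vosk result string, or '' if there is none."""
    try:
        result = json.loads(result_json)
    except json.JSONDecodeError:
        return ""
    if not isinstance(result, dict):
        return ""
    return str(result.get("text", "")).strip()

# Hand-written example of a recognizer result.
print(extract_text('{"text": "turn the light on"}'))  # prints "turn the light on"
```

An empty string means nothing was recognized, in which case there is no point in sending a request to the LLM.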

The xLAM model is an open-source LLM developed by the Salesforce AI Research team. It’s trained and optimized to understand requests rather than necessarily providing text-based answers to questions. The allenporter version has been modified to work with Ollama tools. The 1-billion-parameter model can run on the Raspberry Pi, but as you probably noticed, it’s pretty slow and misinterprets requests easily.

For an LLM that better understands requests, I recommend the Llama3.1:8b model. In the command console, download the model with:

$ ollama pull llama3.1:8b

Note that the Llama 3.1:8b model is almost 5 GB. If you’re running out of space on your flash storage, you can remove previous models. For example:

$ ollama rm tinyllama
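To check whether there is room before pulling a large model, a quick free-space test with Python's standard library might look like this. The `has_room` helper and the choice of `/` as the path are assumptions; point it at whichever filesystem holds your Ollama models.

```python
import shutil

def has_room(path: str, needed_gb: float) -> bool:
    """Return True if the filesystem containing `path` has at least `needed_gb` free."""
    free_gb = shutil.disk_usage(path).free / 1e9  # bytes to decimal gigabytes
    return free_gb >= needed_gb

# About 5 GB is needed for llama3.1:8b, per the size noted above.
print(has_room("/", 5.0))
```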

In the code, change:

MODEL = "allenporter/xlam:1b"

to:

MODEL = "llama3.1:8b"

Run the script again. You’ll notice that the model is less picky about the exact phrasing of the request, but it takes much longer to respond, up to 3 minutes on a Raspberry Pi 5 (8GB RAM).

When you are done, you can exit the virtual environment with the following command:

$ deactivate