When interacting with folks, robots and chatbots could make errors, violating an individual’s belief in them. After that, folks might begin to think about bots unreliable. Numerous belief restore methods carried out by smartbots can be utilized to mitigate the adverse results of those belief breaches. Nonetheless, it’s unclear whether or not such methods can absolutely restore belief and the way efficient they’re after repeated violations of belief.

Due to this fact, scientists from the College of Michigan determined to conduct a examine of robotic habits methods with a purpose to restore belief between a bot and an individual. These belief methods have been apologies, denials, explanations, and guarantees of reliability.

An experiment was performed by which 240 members labored with a robotic as a colleague on a activity by which the robotic typically made errors. The robotic would violate the participant’s belief after which recommend a selected technique to revive belief. The members have been engaged as crew members and the human-robot communication occurred by an interactive digital atmosphere developed in Unreal Engine 4.

The digital atmosphere of the members within the experiment to work together with the robotic.

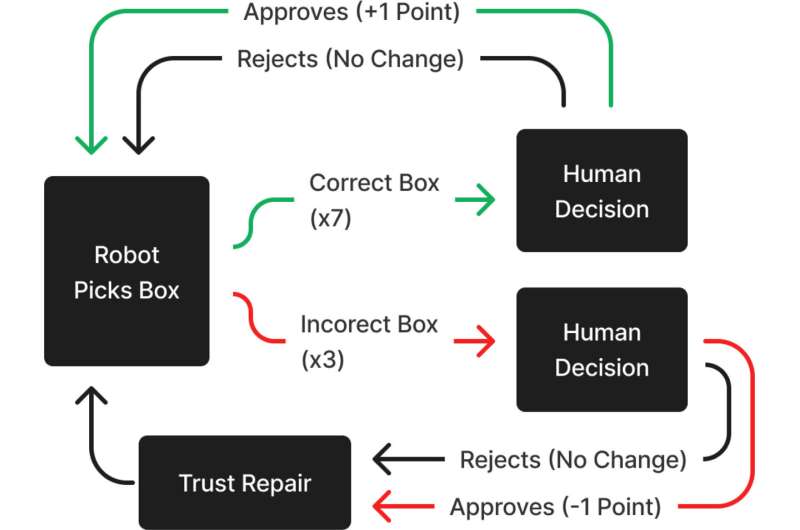

This atmosphere has been modeled to appear like a sensible warehouse setting. Individuals have been seated at a desk with two shows and three buttons. The shows confirmed the crew’s present rating, field processing pace, and the serial quantity members wanted to attain the field submitted by their robotic teammate. Every crew’s rating elevated by 1 level every time an accurate field was positioned on the conveyor belt and decreased by 1 level every time an incorrect field was positioned there. In circumstances the place the robotic selected the unsuitable field and the members marked it as an error, an indicator appeared on the display displaying that this field was incorrect, however no factors have been added or subtracted from the crew’s rating.

The flowchart illustrates the potential outcomes and scores based mostly on the bins the robotic selects and the choices the participant makes.

“To look at our hypotheses, we used a between-subjects design with 4 restore situations and two management situations,” mentioned Connor Esterwood, a researcher on the U-M College of Data and the examine’s lead writer.

The management situations took the type of robotic silence after making a mistake. The robotic didn’t attempt to restore the particular person’s belief in any manner, it merely remained silent. Additionally, within the case of the perfect work of the robotic with out making errors through the experiment, he additionally didn’t say something.

The restore situations used on this examine took the type of an apology, a denial, an evidence, or a promise. They have been deployed after every error situation. As an apology, the robotic mentioned: “I’m sorry I obtained the unsuitable field that point”. In case of denial, the bot acknowledged: “I picked the correct field that point, so one thing else went unsuitable”. For explanations, the robotic used the phrase: “I see it was the unsuitable serial quantity”. And eventually, for the promise situation, the robotic mentioned, “Subsequent time, I’ll do higher and take the correct field”.

Every of those solutions was designed to current just one sort of trust-building technique and to keep away from inadvertently combining two or extra methods. In the course of the experiment, members have been knowledgeable of those corrections by each audio and textual content captions. Notably, the robotic solely briefly modified its habits after one of many belief restore methods was delivered, retrieving the right bins two extra occasions till the subsequent error occurred.

To calculate the information, the researchers used a collection of non-parametric Kruskal–Wallis rank sum assessments. This was adopted by put up hoc Dunn’s assessments of a number of comparisons with a Benjamini–Hochberg correction to regulate for a number of speculation testing.

“We chosen these strategies over others as a result of knowledge on this examine have been non-normally distributed. The primary of those assessments examined our manipulation of trustworthiness by evaluating variations in trustworthiness between the proper efficiency situation and the no-repair situation. The second used three separate Kruskal–Wallis assessments adopted by put up hoc examinations to find out members’ rankings of means, benevolence, and integrity throughout restore situations,” mentioned Esterwood and Robert Lionel, Professor of Data and co-author of the examine.

The primary outcomes of the examine:

- No belief restore technique fully restored the robotic’s trustworthiness.

- Apologies, explanations and guarantees couldn’t restore the notion of means.

- Apologies, explanations and guarantees couldn’t restore the notion of honesty.

- Apologies, explanations, and guarantees restored the robotic’s goodwill in equal measure.

- Denial made it unimaginable to revive the thought of the robotic’s reliability.

- After three failures, not one of the belief restore methods ever absolutely restored the robotic’s trustworthiness.

The outcomes of the examine have two implications. In line with Esterwood, researchers must develop simpler restoration methods to assist robots rebuild belief after their errors. As well as, bots should make sure that they’ve mastered a brand new activity earlier than trying to revive human belief in them.

“In any other case, they danger shedding an individual’s belief in themselves a lot that it is going to be unimaginable to revive it,” concluded Esterwood.